Dynamic Dispatch: From Classic OOP to Modern AI

Exploring how Python, ML frameworks, and agent systems leverage runtime method resolution

When we talk about AI today, we think of neural networks, transformers, and agents that reason and act. But behind the scenes, the software engineering that makes these systems work often relies on classic object-oriented programming techniques like the Virtual Method Table (VMT), a mechanism for flexible method dispatch and polymorphism.

From an engineering perspective, it’s fascinating to see how this concept scales. VMTs and Python’s dynamic dispatch form the backbone of how ML frameworks like PyTorch and TensorFlow route computations, and how AI agents dynamically decide which tools to execute.

Let’s understand these concepts with practical code examples.

What is a Virtual Method Table?

A Virtual Method Table (VMT), also known as a virtual function table or vtable, is a mechanism predominantly used in object-oriented programming languages such as C++ to implement dynamic dispatch or runtime polymorphism.

Its primary purpose is to resolve calls to virtual functions at runtime, rather than at compile time.

Key characteristics of VMTs include:

Dynamic Dispatch: Enables selecting and invoking the appropriate method implementation at runtime based on an object’s actual type rather than its declared type.

Polymorphism: Allows objects from different classes to be treated as instances of a common base class, letting a single interface represent multiple underlying behaviors.

Modularity and Extensibility: Supports modular design by making it easy to introduce new behaviors through derived classes, helping systems evolve without major refactoring.

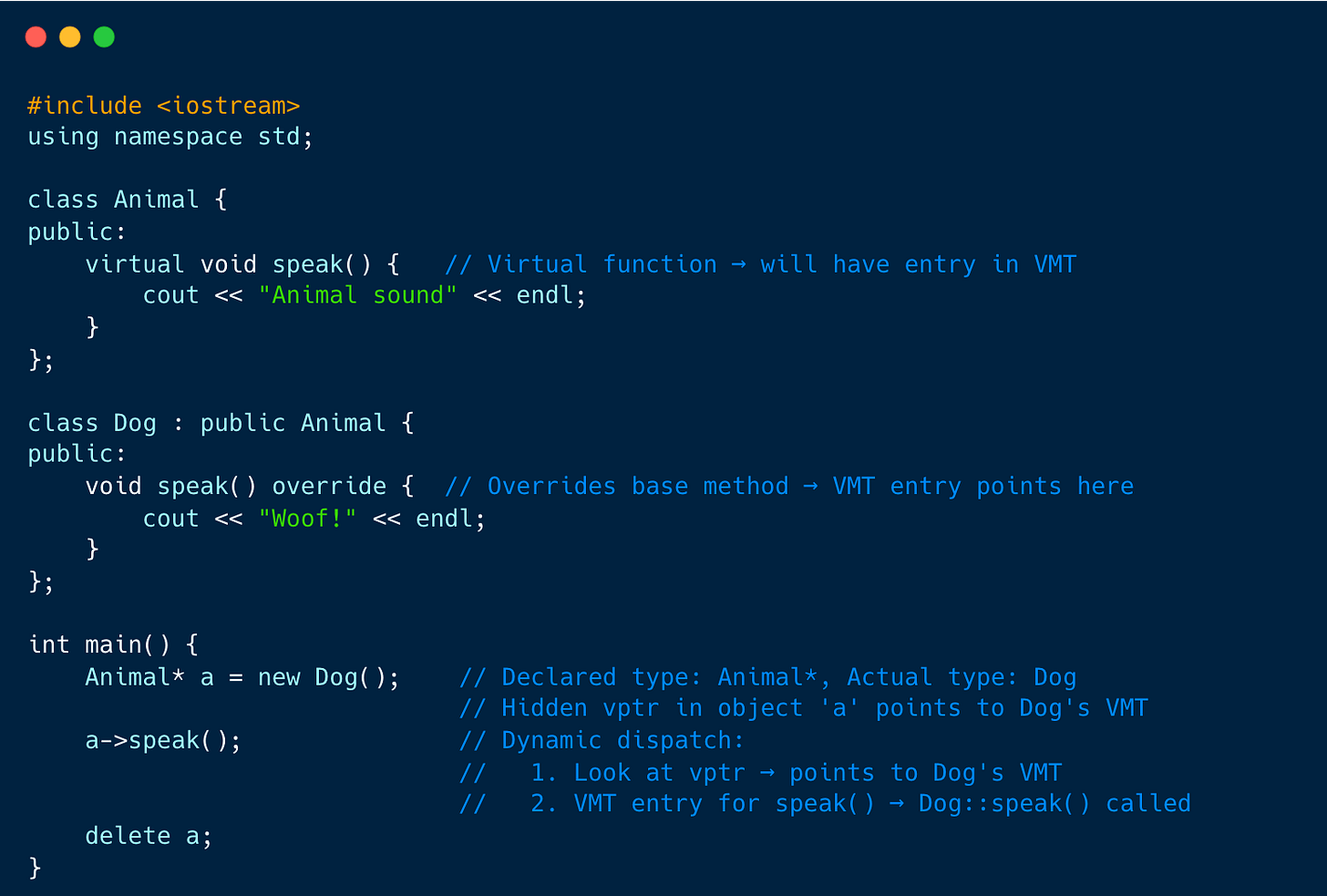

Let’s walk through a C++ code example.

What’s Happening 👓

VMT generation: Both the `Animal` and `Dog` classes have their own VMTs (arrays of pointers to virtual functions).

vptr: Each object stores a hidden pointer (`vptr`) to its class’s VMT.

Dynamic dispatch: When `a->speak()` is called, the program looks at `a`’s vptr to find the correct function in the VMT. Because `a` points to a `Dog` object, `Dog::speak()` is executed, not `Animal::speak()`.
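To make the hidden pieces visible, the vptr and VMT mechanics can be imitated in Python. This is only a rough sketch, not how a C++ compiler actually lays out memory: the names `ANIMAL_VTABLE`, `DOG_VTABLE`, `make_object`, and `call_virtual` are hypothetical, the returned strings are made up, and a dictionary key stands in for the slot index a real vtable would use.

```python
# Illustrative emulation of a vtable; every name here is hypothetical.

def animal_speak(self):
    return "Some generic animal sound"

def dog_speak(self):
    return "Woof!"

# One table per "class": slot name -> function (C++ uses slot indices).
ANIMAL_VTABLE = {"speak": animal_speak}
DOG_VTABLE = {"speak": dog_speak}

def make_object(vtable):
    # Every object carries a hidden pointer (vptr) to its class's table.
    return {"vptr": vtable}

def call_virtual(obj, slot):
    # Dynamic dispatch: follow the vptr, fetch the slot, call it.
    return obj["vptr"][slot](obj)

a = make_object(DOG_VTABLE)      # roughly: Animal* a = new Dog();
print(call_virtual(a, "speak"))  # roughly: a->speak();  prints "Woof!"
```

The caller never names `dog_speak` directly. Which function runs depends only on the table the object points to.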

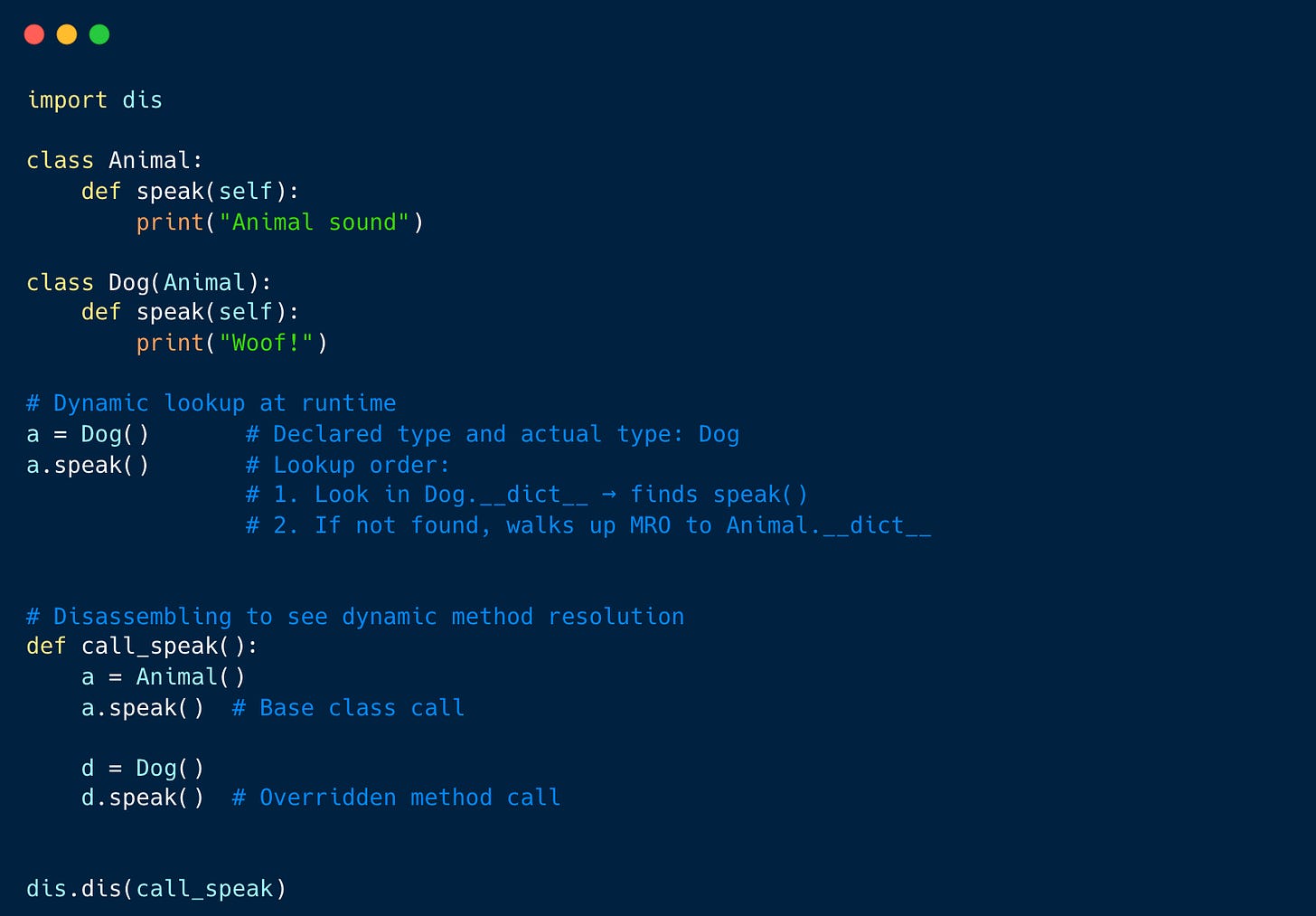

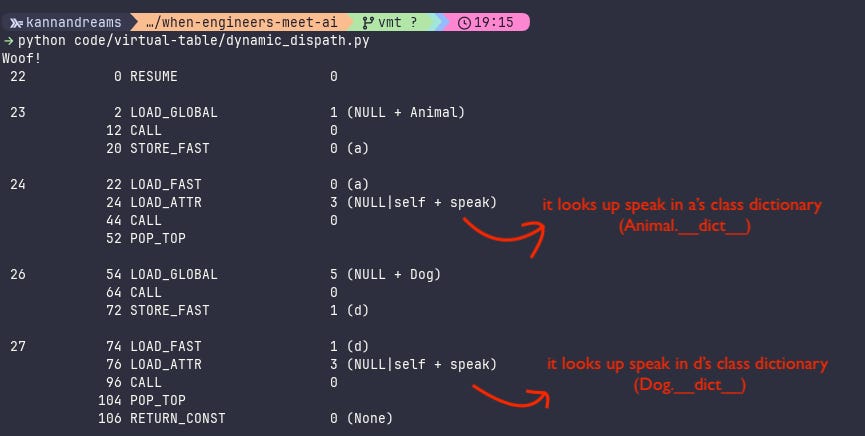

Python: Dynamic Dispatch Without VTables

Python doesn’t have a fixed vtable like C++. Instead, it achieves the same effect dynamically using dictionaries and the class hierarchy.

Every class in Python has a `__dict__`, a mapping of attribute names (including method names) to objects such as functions. When you call a method, Python looks it up by name at runtime.
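A small sketch of that lookup, assuming a hypothetical `Animal`/`Dog` pair with made-up return strings. The helper `find_method` is not a Python API. It is a simplified illustration that ignores instance attributes and descriptors and only shows which class in the MRO supplies a name.

```python
class Animal:
    def speak(self):
        return "Some generic animal sound"

    def eat(self):
        return "Eating"

class Dog(Animal):
    def speak(self):
        return "Woof!"

d = Dog()

# Each class keeps its own attributes in __dict__.
print("speak" in Dog.__dict__)  # True: Dog overrides speak
print("eat" in Dog.__dict__)    # False: eat is defined on Animal

# The MRO is the order in which classes are searched.
print([cls.__name__ for cls in Dog.__mro__])  # ['Dog', 'Animal', 'object']

def find_method(obj, name):
    """Simplified lookup: first class in the MRO that defines `name`."""
    for cls in type(obj).__mro__:
        if name in cls.__dict__:
            return cls
    raise AttributeError(name)

print(find_method(d, "speak").__name__)  # Dog
print(find_method(d, "eat").__name__)    # Animal
```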

When you run `d.speak()`, Python:

Checks `Dog.__dict__` for `speak()`.

If not found, walks up the Method Resolution Order (MRO) to `Animal`.

Disassembling the Method Call (Bytecode Proof)
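One way to inspect the call site yourself is the standard-library `dis` module. The sketch below assumes a hypothetical `call_speak` helper and the same made-up `Animal`/`Dog` classes. The exact bytecode depends on the CPython version, so no output is reproduced here.

```python
import dis

class Animal:
    def speak(self):
        return "Some generic animal sound"

class Dog(Animal):
    def speak(self):
        return "Woof!"

def call_speak(d):
    return d.speak()

# Print the bytecode for the call site.
dis.dis(call_speak)

# Collect opcode names so they can be inspected programmatically.
opnames = [ins.opname for ins in dis.get_instructions(call_speak)]
print(opnames)

# The method is referenced by name only; nothing is bound to Dog here.
print(call_speak.__code__.co_names)
```

On CPython 3.7 to 3.11 the attribute fetch typically appears as `LOAD_METHOD`, and on 3.12 and later as `LOAD_ATTR`. In both cases the instruction carries only the name `speak`, and the class that supplies the function is decided when the code runs.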

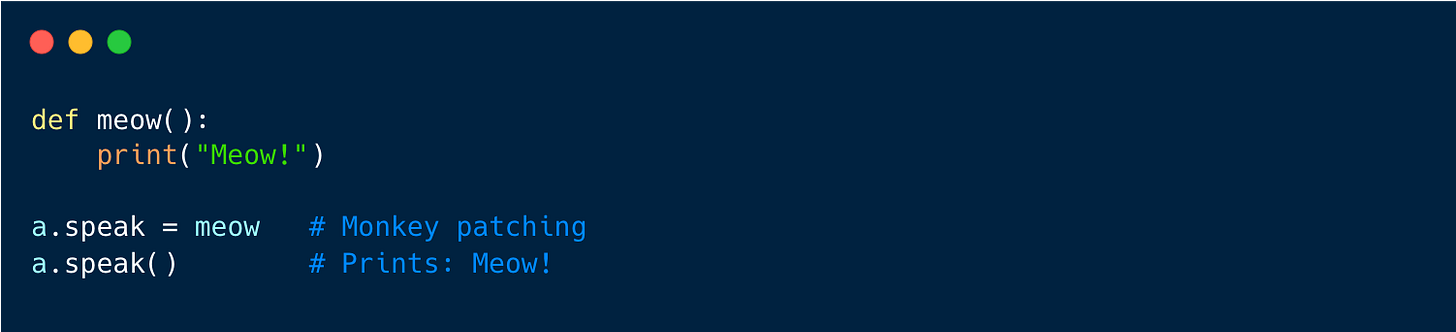

This lookup chain is Python’s virtual dispatch mechanism. It’s slower than a C++ vtable but far more flexible: because the lookup happens by name on every call, you can even replace methods at runtime (a.k.a. monkey patching).
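A minimal monkey-patching sketch, using a hypothetical `Dog` class and a made-up replacement function `loud_speak`:

```python
class Dog:
    def speak(self):
        return "Woof!"

d = Dog()
before = d.speak()

def loud_speak(self):
    return "WOOF!!!"

# Rebind the method on the class at runtime (monkey patching).
Dog.speak = loud_speak

# The existing instance picks up the new method, because the name
# is looked up again on every call.
after = d.speak()
print(before, "->", after)  # Woof! -> WOOF!!!
```

A C++ vtable is fixed once the program is compiled, so there is no direct equivalent of this reassignment.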

How ML Frameworks use Virtual Dispatch

If you thought VMTs and dynamic method lookup were just for C++ or Python classes, think again. Modern ML frameworks like PyTorch and TensorFlow rely on the same core principle of virtual dispatch; they just apply it at a much larger scale.

For example, in PyTorch we have:

Models as classes = usually subclassing `nn.Module` (PyTorch)

Forward methods = each model defines its computation in `forward()`

Dynamic dispatch = ensures the right forward method is called, without the training loop caring about the specific model.

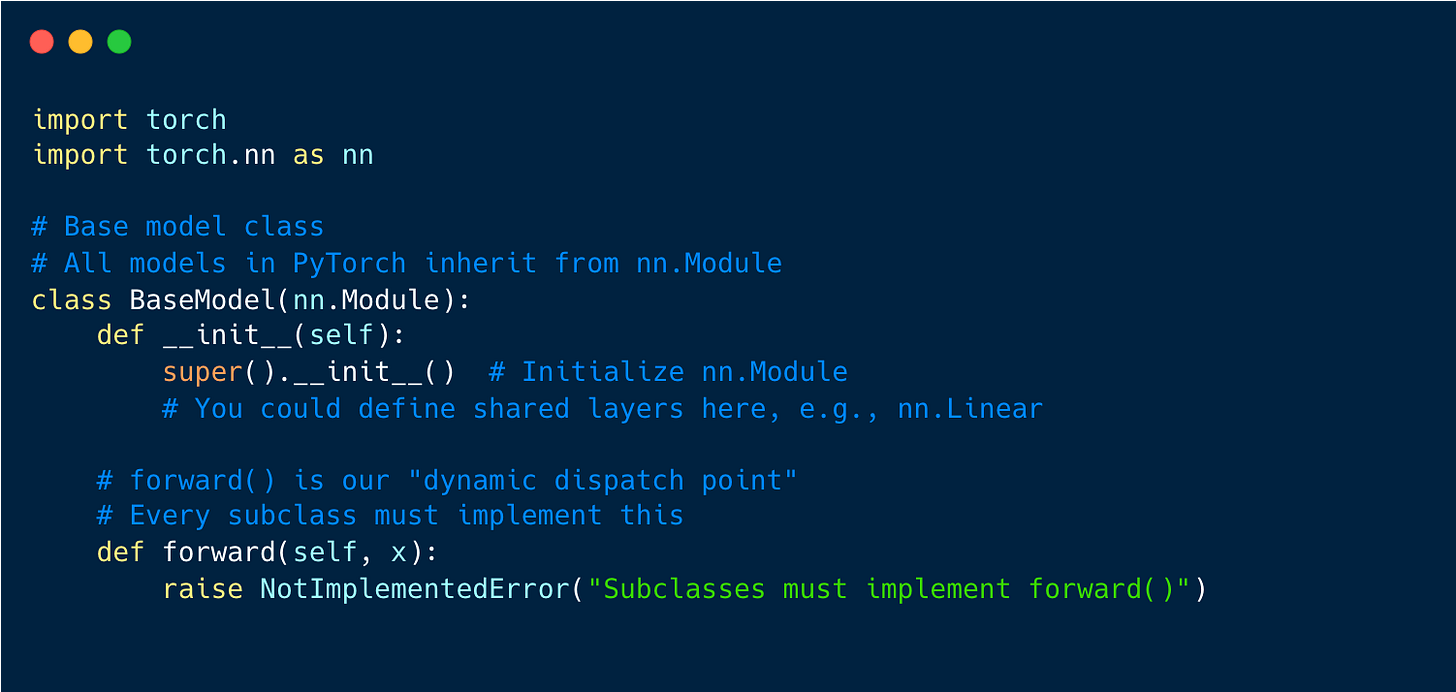

Base Class

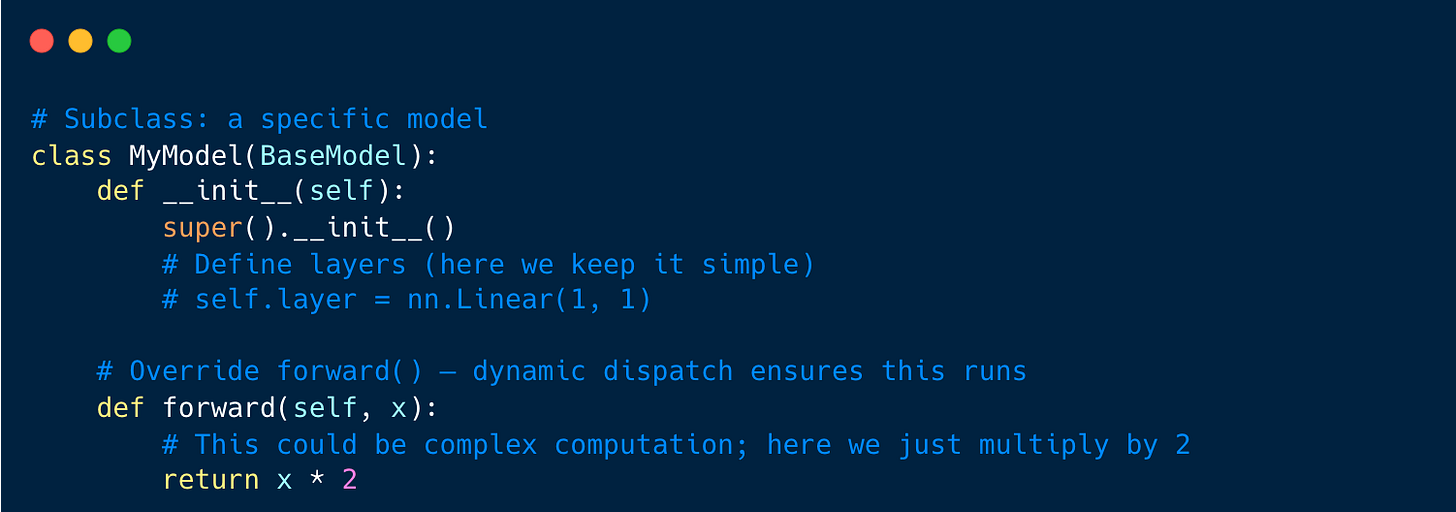

Subclass (Specific Model)

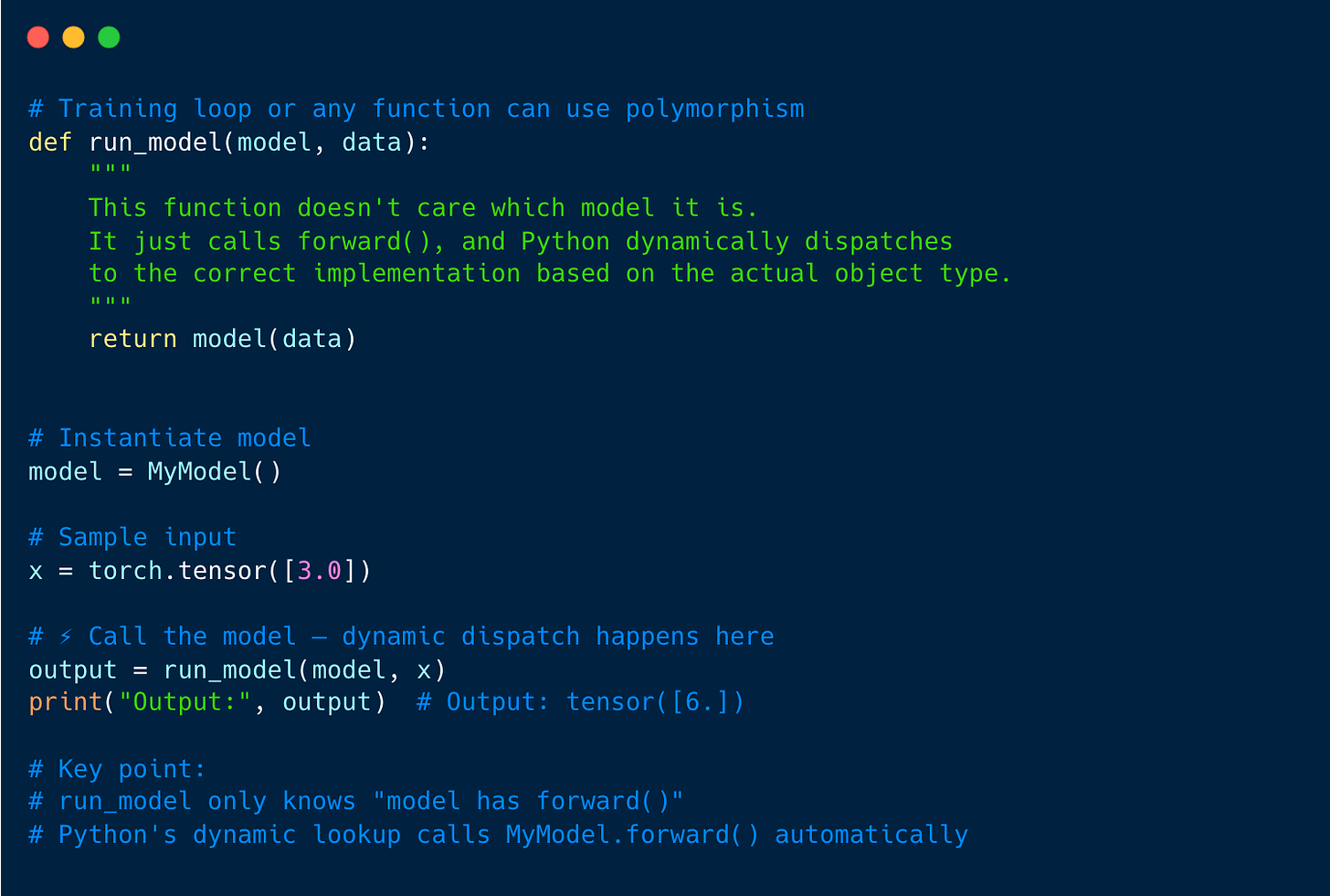

Training Loop (Using Polymorphism)

What’s Happening 👓

Model object (`MyModel`) contains a reference to its class.

Call to `model(x)` triggers Python’s `__call__` on `nn.Module`, which internally calls `self.forward(x)`.

Dynamic dispatch looks in `MyModel.__dict__` for `forward()`. If it weren’t found, it would walk the MRO up to `BaseModel`.

Training loop doesn’t care what the model is, it just uses `forward()`.
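The chain described above can be sketched without installing PyTorch. Here `Module` is a hypothetical, heavily simplified stand-in for `nn.Module` (the real `__call__` also runs hooks and other bookkeeping), the list arithmetic is a placeholder for real layers, and `run_batches` is a made-up substitute for a training loop.

```python
# Torch-free stand-in for nn.Module; `Module` is hypothetical.
class Module:
    def __call__(self, *args, **kwargs):
        # self.forward is resolved at runtime via type(self).__mro__.
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError("subclasses must define forward()")

class BaseModel(Module):
    def forward(self, x):
        return x  # identity, as a placeholder computation

class MyModel(BaseModel):
    def forward(self, x):
        return [2 * v for v in x]  # placeholder for real layers

def run_batches(model, batches):
    # The loop only knows that model(x) works.
    return [model(x) for x in batches]

batches = [[1, 2], [3, 4]]
print(run_batches(BaseModel(), batches))  # [[1, 2], [3, 4]]
print(run_batches(MyModel(), batches))    # [[2, 4], [6, 8]]
```

Swapping `MyModel` for any other subclass requires no change to `run_batches`, which is the property the training loop relies on.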

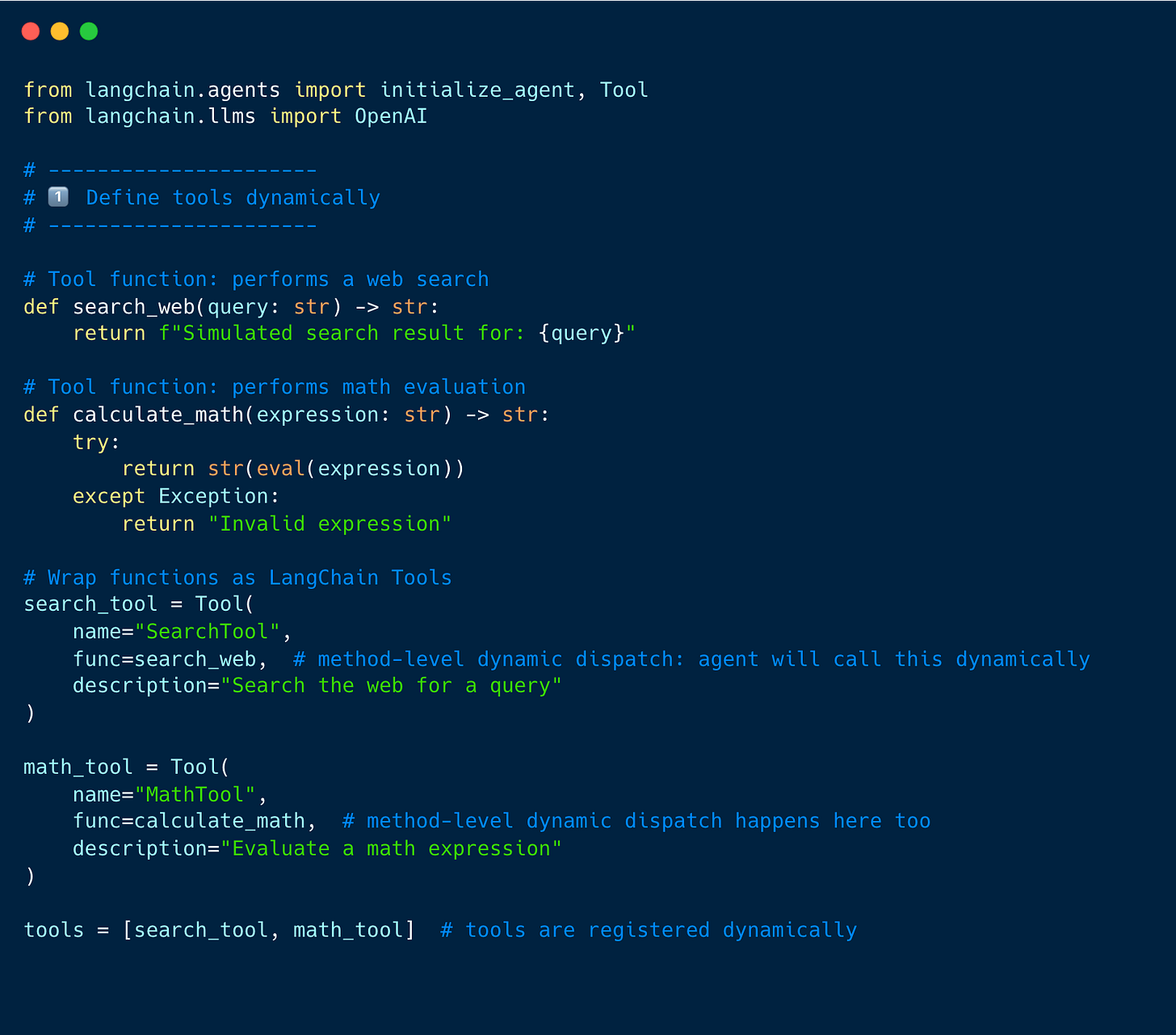

How AI Agent Frameworks apply Dynamic Dispatch

We started by looking at VTables in C++, then saw how Python uses dynamic dispatch instead of rigid tables (“rigid table” here refers to how C++ implements polymorphism through a fixed, precomputed structure). We also explored ML frameworks like PyTorch, where dynamic dispatch lets the training loop call `forward()` without knowing the exact model.

AI agent frameworks like LangChain, LlamaIndex, and OpenAI agents apply the same principle and extend it one step further.

Interface guarantee: Each tool provides a known interface (e.g., a `run()` method).

Dynamic method dispatch: When the agent calls `tool.run()`, Python dynamically dispatches to the correct implementation just like it does for a model’s `forward()` method.

Intelligent tool selection: Unlike a simple loop over tools, the agent can decide at runtime which tool to invoke based on the input. For example, it can pick the `MathTool` for “2 + 2” and `SearchTool` for “Python AI frameworks” (see the tool example below).

AI frameworks rely heavily on runtime polymorphism, which allows new behavior to be attached to existing classes dynamically.

So the agent framework is essentially doing two layers of dynamic dispatch:

Method-level dispatch: Python ensures `run()` of the correct tool is called.

Tool-selection dispatch: The agent’s reasoning logic decides which tool to use without needing to know in advance.

The agent doesn’t need to know the exact tool ahead of time, but Python’s dynamic dispatch ensures the correct method is executed once the agent picks the tool.
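The two layers can be sketched in plain Python. Nothing here is taken from LangChain, LlamaIndex, or any other framework: the `Tool` base class, the stubbed `SearchTool`, and especially `select_tool` are hypothetical. In a real agent the selection step would be driven by an LLM rather than a keyword check.

```python
from abc import ABC, abstractmethod

class Tool(ABC):
    @abstractmethod
    def run(self, query: str) -> str:
        ...

class MathTool(Tool):
    def run(self, query: str) -> str:
        # Handles only "<int> + <int>" to keep the sketch short and safe.
        left, right = query.split("+")
        return str(int(left) + int(right))

class SearchTool(Tool):
    def run(self, query: str) -> str:
        return f"[stub] search results for: {query}"

def select_tool(query: str, tools: dict) -> Tool:
    # Layer 1: stand-in for the agent's reasoning step.
    return tools["math"] if "+" in query else tools["search"]

tools = {"math": MathTool(), "search": SearchTool()}

for query in ["2 + 2", "Python AI frameworks"]:
    tool = select_tool(query, tools)
    # Layer 2: Python resolves run() on the chosen tool's class.
    print(type(tool).__name__, "->", tool.run(query))
```

Adding a third tool means writing one more subclass and registering it. The loop that calls `tool.run(query)` stays untouched.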

1. Tool Definitions (Base + Subclass Analogy)

This part defines the base interface (`tool`) and specific tools (`SearchTool`, `MathTool`).

Python dynamic dispatch ensures that `func()` calls the correct implementation when invoked.

Analogous to base class + subclass in OOP.

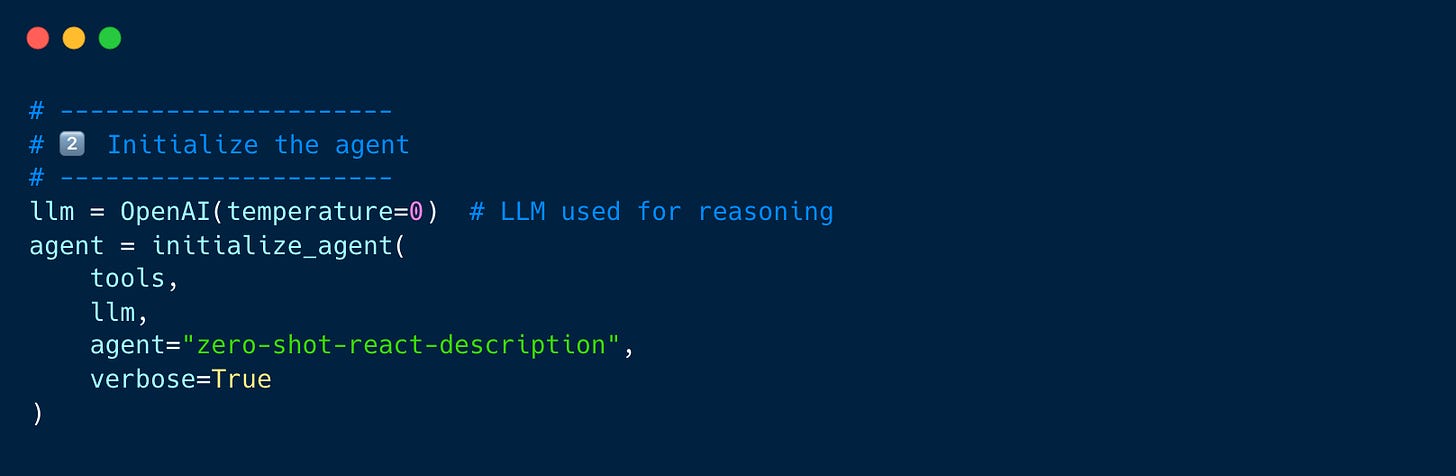

2. Agent Initialization

This part is like the training loop in ML: it doesn’t know the specifics of each tool.

The agent is ready to dynamically pick a tool at runtime based on input.

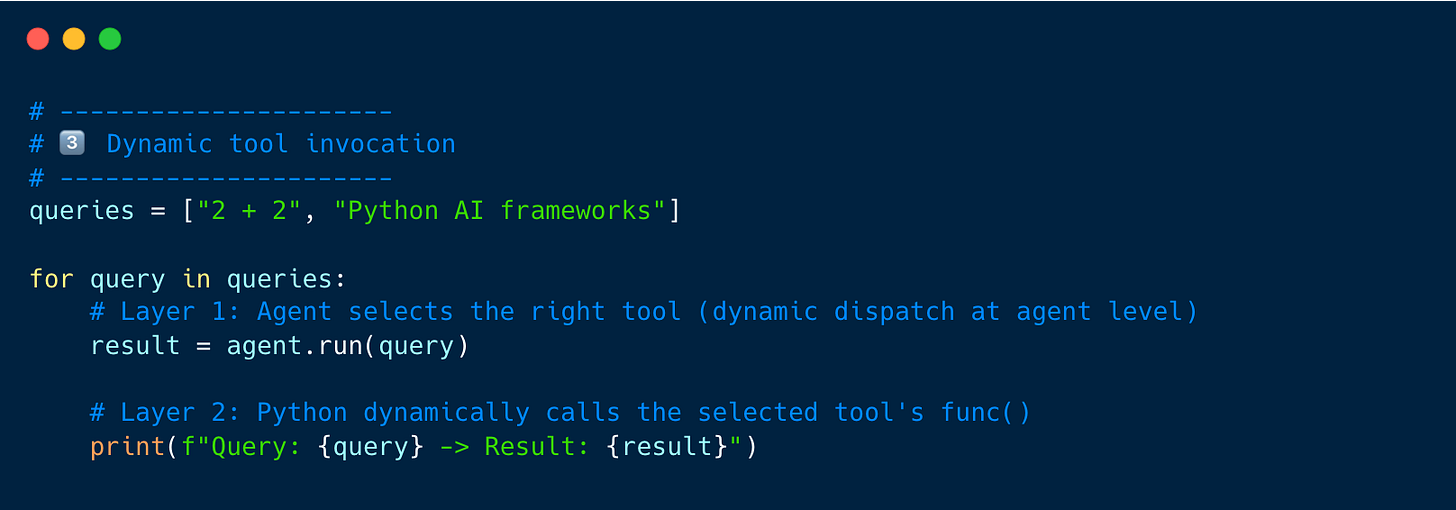

3. Dynamic Tool Invocation (Runtime Dispatch)

Layer 1 (Tool Selection): Agent decides which tool to call based on the query.

Layer 2 (Method Dispatch): Python dynamically calls the correct `func()` of the chosen tool.

This mirrors the ML model `forward()` dispatch and the VTable example: flexible, runtime method resolution.
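The three steps can be put together in one sketch in which each tool stores its behavior in a `func` field instead of overriding a method. This is an assumption about the shape of the original example, not a reproduction of it: the `Tool` dataclass, the `Agent` class, and the digit-based `choose` rule are all hypothetical, and no agent framework is used.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Tool:
    name: str
    description: str
    func: Callable[[str], str]

def search(query: str) -> str:
    return f"[stub] results for '{query}'"

def add(query: str) -> str:
    # Handles only sums such as "2 + 2".
    return str(sum(int(part) for part in query.split("+")))

# 1. Tool definitions
search_tool = Tool("search", "look things up", search)
math_tool = Tool("math", "add integers", add)

# 2. Agent initialization
class Agent:
    def __init__(self, tools):
        self.tools = {t.name: t for t in tools}

    def choose(self, query: str) -> str:
        # Hypothetical rule standing in for LLM reasoning.
        return "math" if any(ch.isdigit() for ch in query) else "search"

    # 3. Dynamic tool invocation
    def run(self, query: str) -> str:
        tool = self.tools[self.choose(query)]  # Layer 1: tool selection
        return tool.func(query)                # Layer 2: call its func

agent = Agent([search_tool, math_tool])
print(agent.run("2 + 2"))                 # 4
print(agent.run("Python AI frameworks"))  # [stub] results for 'Python AI frameworks'
```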

Why Dynamic Dispatch Matters in AI:

Hardware Abstraction: AI frameworks like TensorFlow or PyTorch dispatch the same operation to different kernels depending on the device. For example, a matrix multiplication runs a GPU kernel when its tensors live on a GPU and a CPU kernel otherwise. TensorFlow places operations on an available GPU by default, while in PyTorch you choose the device for your tensors.

Polymorphism: Different types of layers (like ConvolutionalLayer, ReLULayer, DropoutLayer) can each have their own `forward()` and `backward()` methods. Dynamic dispatch makes sure the right method runs for each layer at runtime.

Flexibility: AI frameworks need to support many models, layers, and optimizers. Dynamic dispatch lets developers add new features, like a custom activation function, without changing the core code.
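The hardware-abstraction point can be illustrated with a toy dispatch table keyed by operation and device. This is a loose analogy, not the actual dispatcher of PyTorch or TensorFlow: `KERNELS`, `register`, and `dispatch` are hypothetical names, and the "gpu" kernel simply reuses the CPU code where a real backend would launch a GPU kernel.

```python
# Toy dispatch table keyed by (operation, device); all names hypothetical.
KERNELS = {}

def register(op, device):
    def decorator(fn):
        KERNELS[(op, device)] = fn
        return fn
    return decorator

@register("matmul", "cpu")
def matmul_cpu(a, b):
    # Plain nested-loop matrix multiply on lists of lists.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

@register("matmul", "gpu")
def matmul_gpu(a, b):
    # Placeholder: a real backend would launch a GPU kernel here.
    return matmul_cpu(a, b)

def dispatch(op, device, *args):
    # Fall back to the CPU kernel when none is registered for `device`.
    kernel = KERNELS.get((op, device)) or KERNELS[(op, "cpu")]
    return kernel.__name__, kernel(*args)

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(dispatch("matmul", "gpu", a, b))  # ('matmul_gpu', [[19, 22], [43, 50]])
print(dispatch("matmul", "tpu", a, b))  # ('matmul_cpu', [[19, 22], [43, 50]])
```

Supporting a new device means registering one more kernel. Callers of `dispatch` do not change, which is the same extensibility argument made for subclasses earlier.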


