Python 3.14’s No-GIL Explained and Performance Analysis

From thread basics to a real benchmark comparing single-threaded and no-GIL performance.

Recently, social media platforms like LinkedIn and X have been flooded with posts about Python 3.14, and most of them are summaries of the release notes.

I don’t want this article to be another one of those. Instead, let’s focus on one groundbreaking concept that has the entire Python community excited.

So, what’s the big deal?

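Python 3.14 marks a historic milestone: the free-threaded build, which runs Python without the Global Interpreter Lock (GIL), is now officially supported. It remains an optional build, and the default interpreter still ships with the GIL.

What does it mean?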

🔥 True parallel execution across multiple CPU cores

⚡ Faster multi-threaded performance

🚀 Advantages in data science, AI, and data engineering workloads.

Let’s break down what this really means, starting from the basics and ending with a benchmark comparison and its visualization.

Understanding Threads and Concurrency

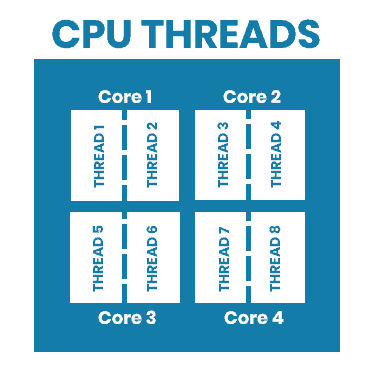

In computing, a thread is simply a sequence of instructions that a processor can run independently. A single Python process can have multiple threads, each doing some task like reading files, making network calls, or crunching numbers.

Think of a program like a recipe. Some steps can happen at the same time, for example boiling water while chopping vegetables. Each of those simultaneous activities represents a thread. Threads allow a program to handle multiple tasks at once.

This ability to have different parts of a program run independently is called concurrency.

It’s often achieved through multithreading, where a single program creates several threads that appear to run at the same time. True parallelism happens only when multiple processor cores execute threads simultaneously.

However, even on a single core, a computer can rapidly switch between threads creating the illusion of parallel execution and making programs feel faster and more responsive.
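To make the recipe analogy concrete, here is a minimal sketch using the standard `threading` module. The task names and durations are made up for illustration, and `time.sleep` stands in for any kind of waiting.

```python
import threading
import time


def run_kitchen() -> tuple[list[str], float]:
    """Run two hypothetical kitchen tasks in separate threads."""
    finished: list[str] = []
    finished_lock = threading.Lock()

    def do_task(name: str, seconds: float) -> None:
        time.sleep(seconds)  # stands in for waiting (water boiling, a file, a socket)
        with finished_lock:  # protect the shared list while appending
            finished.append(name)

    threads = [
        threading.Thread(target=do_task, args=("boil water", 0.2)),
        threading.Thread(target=do_task, args=("chop vegetables", 0.1)),
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for both tasks to finish
    return finished, time.perf_counter() - start


finished, elapsed = run_kitchen()
print(finished)
print(f"elapsed: {elapsed:.2f}s")
```

Because the two waits overlap, the elapsed time should be close to the longer task rather than the sum of both.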

The Global Interpreter Lock (GIL)

For many years, Python’s primary implementation, CPython, has included a mechanism known as the Global Interpreter Lock (GIL). The GIL is a mutex (a type of lock) that protects access to Python objects, ensuring that only one thread can execute Python bytecode at any given time, even on multi-core processors.

Why the GIL Exists

The GIL was originally added to Python to make memory management easier and to prevent race conditions, situations where multiple threads try to change the same data at once. CPython manages memory with reference counting, and those counts must never be updated by two threads at the same moment. Without the GIL, CPython’s memory handling would be much more complicated and prone to deadlocks or data corruption. At the time, most Python programs were single-threaded, so the GIL made the interpreter simpler, safer, and easier to maintain.
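A race condition is easiest to see at the application level. The sketch below is an illustration only, not CPython’s internals: the `SafeCounter` class is a hypothetical helper whose lock makes a read-modify-write update safe, which is roughly the kind of protection the GIL gives the interpreter’s own bookkeeping.

```python
import threading


class SafeCounter:
    """Hypothetical counter whose increments are protected by a lock."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # `self.value += 1` is a read, an add, and a write. Without the lock,
        # two threads could read the same old value and one update would be lost.
        with self._lock:
            self.value += 1


def add_many(counter: SafeCounter, n: int) -> None:
    for _ in range(n):
        counter.increment()


counter = SafeCounter()
threads = [threading.Thread(target=add_many, args=(counter, 10_000)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter.value)  # 40000: every increment is accounted for
```

Note that the GIL does not make application code like this safe by itself. Explicit locks around shared mutable state are needed with or without it.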

How the GIL Works

When a Python program runs multiple threads, the GIL ensures that only one thread can execute Python bytecode at a time. Before running, a thread must first acquire the GIL. It releases the lock when it blocks on an I/O operation or, in modern CPython, when another thread requests the GIL after a fixed switch interval (5 ms by default), so another thread can take over. As a result, even on a multi-core CPU, only one Python thread can run Python code at any moment and the rest are simply waiting for their turn.
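In current CPython versions the hand-off between threads is time-based, and the interval can be inspected from Python. A minimal sketch:

```python
import sys

# Time in seconds after which a running thread is asked to give up the GIL
# so that a waiting thread can take its turn.
interval = sys.getswitchinterval()
print(f"switch interval: {interval * 1000:.1f} ms")
```

`sys.setswitchinterval()` can change this value, although tuning it is rarely necessary.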

Performance Implications

CPU-bound tasks

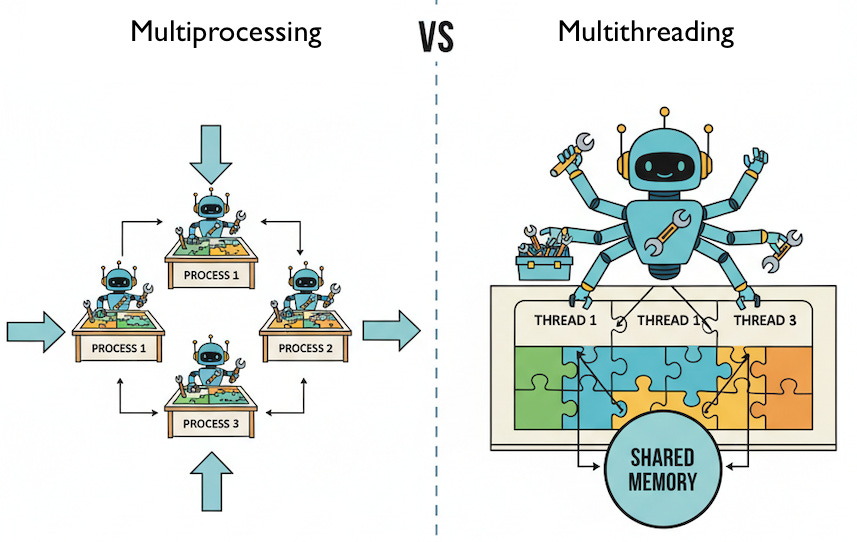

The GIL greatly affects Python’s performance, especially for CPU-bound tasks, those that spend most of their time doing calculations rather than waiting for I/O. In these cases, Python’s multithreading doesn’t achieve true parallel execution across multiple CPU cores. Instead, threads compete for the GIL, and the constant switching between them can actually make a program slower than a single-threaded one due to context-switching overhead.

I/O-bound tasks

On the other hand, for I/O-bound tasks such as network requests or file reads, the GIL’s impact is much smaller. When a thread performs an I/O operation, it usually releases the GIL, allowing other threads to run in the meantime. This makes it possible to achieve good concurrency for I/O-heavy workloads, even with the GIL in place.
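The sketch below illustrates this with a thread pool. `fake_request` is a hypothetical stand-in for a network call, and `time.sleep` simulates the wait, during which the GIL is released.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(i: int) -> int:
    """Hypothetical I/O call: wait 0.1 s, then return a dummy result."""
    time.sleep(0.1)  # simulated I/O wait; other threads can run meanwhile
    return i * 2


start = time.perf_counter()
with ThreadPoolExecutor(max_workers=4) as pool:
    # map() preserves input order even though the calls overlap in time
    responses = list(pool.map(fake_request, range(4)))
elapsed = time.perf_counter() - start

print(responses)
print(f"4 requests took {elapsed:.2f}s")
```

Run sequentially, the four calls would take about 0.4 s. With four threads the waits overlap, so the total should be close to a single call, even on a build with the GIL.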

Python 3.14: Free-Threaded (No-GIL) Python

After years of R&D, Python 3.14 marks a pivotal moment in Python’s history: the free-threaded (No-GIL) build of the Python interpreter is now officially supported.

PEP 703 – Making the Global Interpreter Lock Optional in CPython

PEP 779 – Criteria for supported status for free-threaded Python

This means that developers can now officially leverage Python without the constraints of the Global Interpreter Lock, opening up new possibilities for true parallel execution in multithreaded Python applications.
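To see which kind of interpreter a script is running on, something like the following sketch can help. It assumes CPython: `sys._is_gil_enabled()` only exists on 3.13 and later, so the code falls back to "GIL enabled" when it is missing, and `gil_status` is a hypothetical helper name.

```python
import sys
import sysconfig


def gil_status() -> str:
    """Describe whether this interpreter is free-threaded and whether the GIL is on."""
    # Py_GIL_DISABLED is set to 1 on free-threaded builds; it is 0 or None otherwise.
    free_threaded_build = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))

    # Available on CPython 3.13+. On older versions assume the GIL is enabled.
    is_gil_enabled = getattr(sys, "_is_gil_enabled", None)
    gil_enabled = is_gil_enabled() if is_gil_enabled is not None else True

    if not free_threaded_build:
        return "standard build (GIL always on)"
    if gil_enabled:
        return "free-threaded build, but the GIL is currently enabled"
    return "free-threaded build, GIL disabled"


status = gil_status()
print(sys.version)
print(status)
```

A free-threaded build can still run with the GIL turned back on, for example when it is requested through the `PYTHON_GIL=1` environment variable, which is why the sketch checks both values.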

CPython’s internal architecture has undergone major changes, particularly in memory management and object safety, to enable thread-safe execution without depending on a global lock.

True Parallelism: CPU-bound tasks can finally benefit from multiple cores, potentially leading to significant speedups.

Simplified Concurrency Models: Developers no longer need to rely on multiprocessing (which bypasses the GIL by running separate Python processes) for CPU-bound parallelism, simplifying application design.

Improved Responsiveness: Applications can remain more responsive, as long-running computations in one thread won’t block other threads from executing.

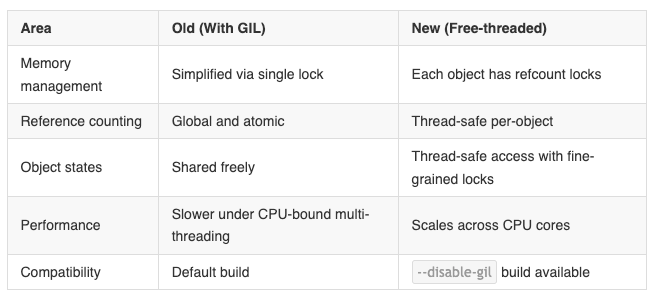

Architecture Changes:

Benchmark Comparison

Prime Number computation

Let’s look at a classic CPU-bound problem, prime number computation.

This task involves heavy mathematical operations and minimal I/O, making it ideal for testing true parallel execution.
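The actual benchmark code is linked below. As a rough idea of what such a test looks like, here is a minimal sketch that is not the benchmark itself: it assumes simple trial division, four threads, and a deliberately small limit so that it finishes quickly. All function names and the limit are illustrative.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def is_prime(n: int) -> bool:
    """Trial division by odd numbers up to sqrt(n)."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    for d in range(3, math.isqrt(n) + 1, 2):
        if n % d == 0:
            return False
    return True


def count_primes(start: int, stop: int) -> int:
    """Count primes in the half-open range [start, stop)."""
    return sum(1 for n in range(start, stop) if is_prime(n))


def count_primes_threaded(limit: int, workers: int = 4) -> int:
    """Split [0, limit) into contiguous chunks and count each in its own thread."""
    step = max(1, math.ceil(limit / workers))
    chunks = [(lo, min(lo + step, limit)) for lo in range(0, limit, step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(lambda chunk: count_primes(*chunk), chunks))


LIMIT = 100_000  # illustrative; a real benchmark would use much larger ranges

t0 = time.perf_counter()
single = count_primes(0, LIMIT)
t1 = time.perf_counter()
multi = count_primes_threaded(LIMIT)
t2 = time.perf_counter()

print(f"primes below {LIMIT}: {single}")
print(f"single-threaded: {t1 - t0:.3f}s, 4 threads: {t2 - t1:.3f}s")
```

Both calls return the same count. Only the timing differs, and on a build with the GIL the two timings should be roughly equal. The contiguous chunks are also not perfectly balanced, since larger numbers take longer to test.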

My Mac configuration: macOS Sequoia (arm64) | Mac mini (M1, 2020) | 16 GB memory.

Benchmark code: https://github.com/kannandreams/when-engineers-meet-ai/tree/main/code/python-gil

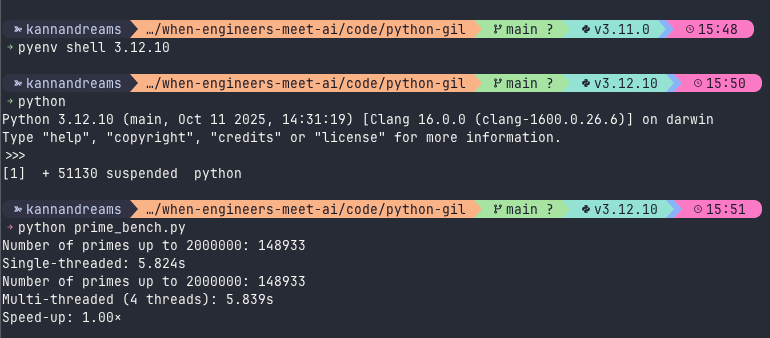

Python == 3.12.10 (with GIL)

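Even though I launched 4 threads, the total execution time is almost the same as the single-threaded version. That’s because of the GIL: only one thread can execute Python bytecode at a time, so the threads take turns instead of running in parallel.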

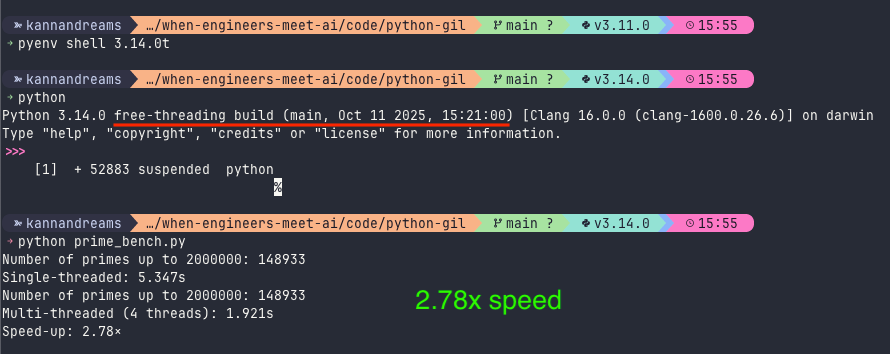

Python == 3.14 (no-GIL)

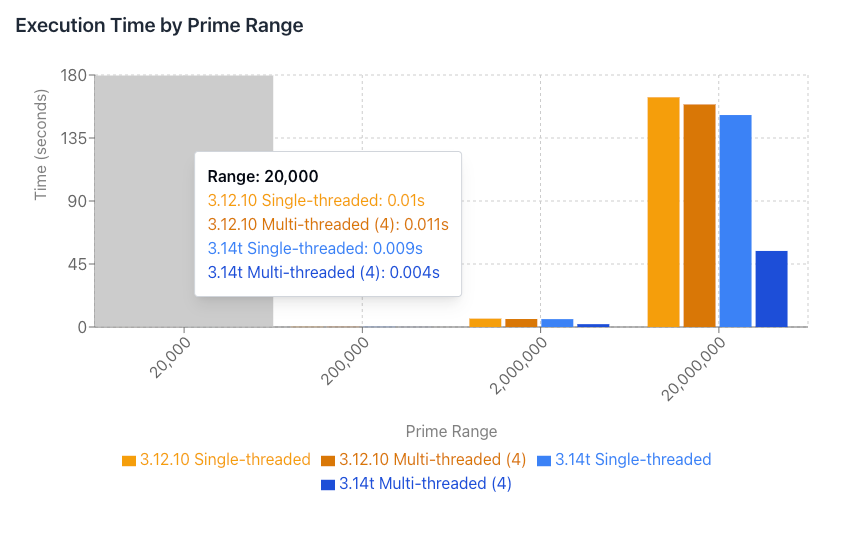

The chart below shows the following:

Python 3.14t shows significant multi-threading performance gains, with 4-thread execution being 2.8x faster than single-threaded execution at the largest range.

Python 3.12.10 shows minimal benefit from multi-threading, likely due to GIL limitations, with multi-threaded execution being slightly slower.

Python 3.14t is consistently faster in both single and multi-threaded scenarios, with the performance gap widening significantly with multi-threading.

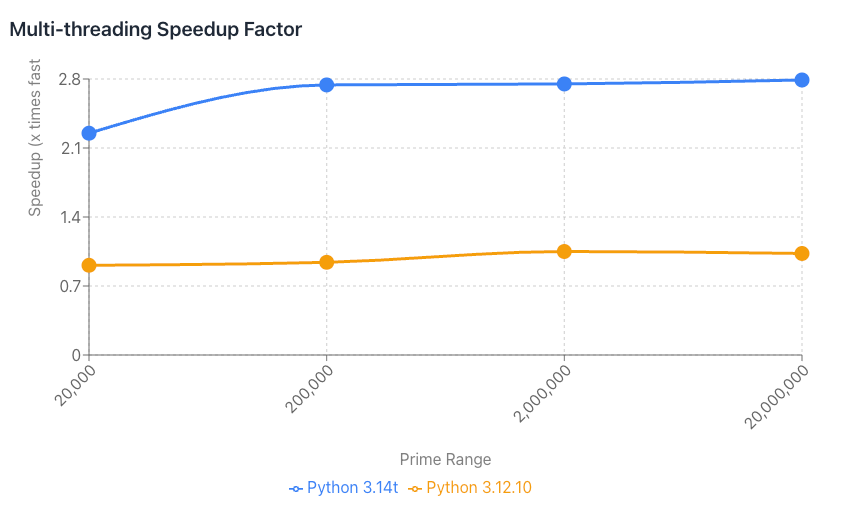

The chart below shows how much faster multi-threaded (4 threads) execution is compared to single-threaded execution.

Python 3.14t: Shows increasing speedup with larger ranges, achieving ~2.8x speedup at 20M primes.

Python 3.12.10: Shows speedup close to 1.0x (no improvement), indicating that the GIL prevents effective parallelization.
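One way to put these speedups in context is parallel efficiency, meaning the speedup divided by the number of threads. The sketch below uses only the figures quoted above (about 2.8x and about 1.0x on 4 threads). `parallel_efficiency` is a hypothetical helper, not part of the benchmark.

```python
def parallel_efficiency(speedup: float, threads: int) -> float:
    """Fraction of the ideal linear speedup that was actually achieved."""
    return speedup / threads


free_threaded = parallel_efficiency(2.8, 4)  # Python 3.14t figure from above
with_gil = parallel_efficiency(1.0, 4)       # Python 3.12.10 figure from above

print(f"3.14t:   {free_threaded:.0%}")  # 70%
print(f"3.12.10: {with_gil:.0%}")       # 25%
```

An efficiency below 100% is expected, since thread startup, uneven work distribution, and synchronization all take a share.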

I am interested in exploring parallel pytest runs to see whether test speed has improved in 3.14 compared with 3.13.
