We are now seeing the rise of software agents in various forms, including Manus AI, Claude Code, and Perplexity. These agents do more than answer questions; they actively perform tasks on the user's behalf. They do this by converting natural language prompts into intelligent workflows and orchestrating complex tasks in the background.

My recent analysis of how Manus AI handles a resume-ranking use case led me to explore a broader architectural pattern behind software agents.

By observing its step-by-step execution, from prompt ingestion to final output, I tried to reverse-engineer how it functions as an agent inside a sandboxed virtual machine.

Perceive, Plan, Perform

An abstract, phased system framework for software agents: a common interface that describes how an agent functions regardless of its underlying intelligence, specific tools, or domain.

I’ve adopted the 3P Architecture (Perceive, Plan, Perform), a model inspired by robotics and autonomous system design. It provides a clean, modular way to describe how software agents should understand, reason, and act.

I see the 3P Architecture as analogous to the ETL pattern in data engineering: both break complex processes into distinct phases and integrate cleanly with data, infrastructure, and tools. Just as ETL can be implemented with various technologies, the 3P model provides a flexible framework that is agnostic to the underlying reasoning engine or execution platform.

In this post, I will dive into the technical architecture, break down its key components, and apply the 3P model using Manus AI's resume-ranking use case as an example.

Before diving into the details, let me first define what I mean by a software agent and explore its core components.

Software Agent

A Software Agent is a self-contained, autonomous service that can understand goals, plan tasks, and execute actions, often powered by a reasoning engine.

While many agents today rely on LLMs for reasoning, these are merely today’s generation of reasoning engines. In the future, they may be replaced or augmented by more specialized systems such as symbolic planners, multi-modal models, or hybrid architectures. In fact, this evolution is already underway.

That’s why I prefer the term “Software Agent” over “LLM Agent”:

LLM Agent ties the architecture to an implementation detail.

Software Agent preserves the general system design.

A Software Agent is a technology-agnostic abstraction: it reflects the architecture, not the engine.

It's an engineering construct that can be packaged, deployed, scaled, and monitored just like any modern software service.

Think of it like a web server: you don’t call it an “Nginx Server” unless the implementation specifics are relevant.

This abstraction allows flexibility and evolution in how intelligence is implemented, while preserving a consistent interface hierarchy.

Intelligence Engine: The agent’s “brain,” responsible for understanding goals, making decisions, and generating plans. It is often powered by an LLM today, but it is not limited to one.

Tools: The agent’s capabilities. Tools give the agent the power to interact with the external world; they can include API calls, file operations, shell commands, browser interactions, and more.

Memory: A persistent or temporary store of context, past actions, and observations. Memory enables long-term reasoning, personalization, and continuity across tasks. It can be kept in structured stores (e.g., vector databases, key-value stores).

Environment: The execution layer where the agent operates such as a containerized sandbox, virtual machine, browser runtime, or remote shell.

It ensures secure, isolated, and observable execution of the agent’s actions.
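To make the abstraction concrete, here is a minimal Python sketch (assuming Python 3.9+) of the four components as plain types. `ReasoningEngine`, `SoftwareAgent`, and `FixedPlanner` are hypothetical names for illustration, not part of Manus AI or any library. The point is that anything with a matching `plan` method, whether an LLM client or a symbolic planner, can be plugged in as the engine.

```python
from dataclasses import dataclass, field
from typing import Callable, Protocol


class ReasoningEngine(Protocol):
    """Intelligence Engine: anything that turns a goal into step names.

    An LLM client, a symbolic planner, or a hybrid could implement this.
    """

    def plan(self, goal: str, context: list[str]) -> list[str]: ...


@dataclass
class SoftwareAgent:
    engine: ReasoningEngine                          # Intelligence Engine
    tools: dict[str, Callable[..., str]]             # Tools, looked up by name
    memory: list[str] = field(default_factory=list)  # Memory: simple in-process log
    environment: str = "sandbox"                     # Environment label (VM, container, ...)

    def remember(self, item: str) -> None:
        self.memory.append(item)

    def propose_plan(self, goal: str) -> list[str]:
        # The agent delegates reasoning to whatever engine it was given.
        return self.engine.plan(goal, self.memory)


class FixedPlanner:
    """Toy engine with a hard-coded plan, standing in for an LLM."""

    def plan(self, goal: str, context: list[str]) -> list[str]:
        return ["list_files", "read_files", "score_resumes"]


agent = SoftwareAgent(engine=FixedPlanner(), tools={"echo": lambda s: s})
agent.remember("user prefers Excel output")
print(agent.propose_plan("rank candidates"))
```

Swapping `FixedPlanner` for an LLM-backed class would change the engine without touching the rest of the agent, which is the separation the Software Agent abstraction is meant to preserve.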

The 3P Technical Architecture

I’ll walk through the architecture concepts using the Manus AI resume-ranking use case for recruitment as an example.

1. Perceive: Sensing the User's World

In robotics, the Perceive phase involves sensing the physical world with sensors. For a software agent, the “environment” is the user's digital context, and its “sensors” are the algorithms that process language and data.

This phase is the crucial first step where the agent gathers all relevant information from the user's prompt and the broader operational context.

This process can be broken down into two main activities:

Information Gathering (Sensing): The agent first ingests the raw inputs. This includes:

The User Prompt: The natural language request from the user.

The Broader Context: Incorporating historical interactions, user preferences, system state, and available data (e.g., the contents of files uploaded in a directory) to build a complete picture.

Information Processing (Understanding): Once the data is gathered, the agent uses Natural Language Understanding (NLU) to interpret it. The goal is to determine the user's underlying intent and extract key details. This involves:

Intent Recognition: Identifying the high-level goal (e.g., "rank candidates").

Entity & Keyword Extraction: Pulling out specific details such as "senior developer", "Python", and "top 3".

The output of the “Perceive” phase is a rich, structured data object (often JSON) that represents the user's goal in machine-actionable form.
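As an illustration, the sketch below turns a prompt plus its file context into such a structured object. It assumes simple keyword and regex rules as a stand-in for a real NLU model or LLM call; `PerceivedGoal`, `perceive`, and the rule tables are hypothetical names, and the field layout is my own, not Manus AI's actual schema.

```python
import json
import re
from dataclasses import asdict, dataclass, field


@dataclass
class PerceivedGoal:
    intent: str
    entities: dict[str, object] = field(default_factory=dict)
    context: dict[str, object] = field(default_factory=dict)


# Hypothetical keyword rules standing in for a real NLU model or LLM call.
INTENT_RULES = {"rank": "rank_candidates", "summarize": "summarize_documents"}
KNOWN_SKILLS = ["python", "reinforcement learning", "rl"]


def perceive(prompt: str, uploaded_files: list[str]) -> PerceivedGoal:
    text = prompt.lower()
    # Intent recognition: first rule whose keyword appears in the prompt.
    intent = next((v for k, v in INTENT_RULES.items() if k in text), "unknown")
    # Entity and keyword extraction.
    entities: dict[str, object] = {}
    top = re.search(r"top\s+(\d+)", text)
    if top:
        entities["top_k"] = int(top.group(1))
    if "senior" in text:
        entities["seniority"] = "senior"
    entities["skills"] = [s for s in KNOWN_SKILLS
                          if re.search(rf"\b{re.escape(s)}\b", text)]
    # Broader context: here just the uploaded files.
    return PerceivedGoal(intent=intent, entities=entities,
                         context={"files": uploaded_files})


goal = perceive("Rank the top 3 senior developers with Python experience",
                ["resumes.zip"])
print(json.dumps(asdict(goal)))
```

The resulting JSON object is what the Plan phase would consume; a production agent would replace the rule tables with a model call but keep the same output contract.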

2. Plan: Creating a Blueprint for Action

If the “Perceive” phase is the agent's eyes and ears, the “Plan” phase is its strategic brain. In this phase, the agent transforms the structured intent from the previous phase into a detailed, executable strategy.

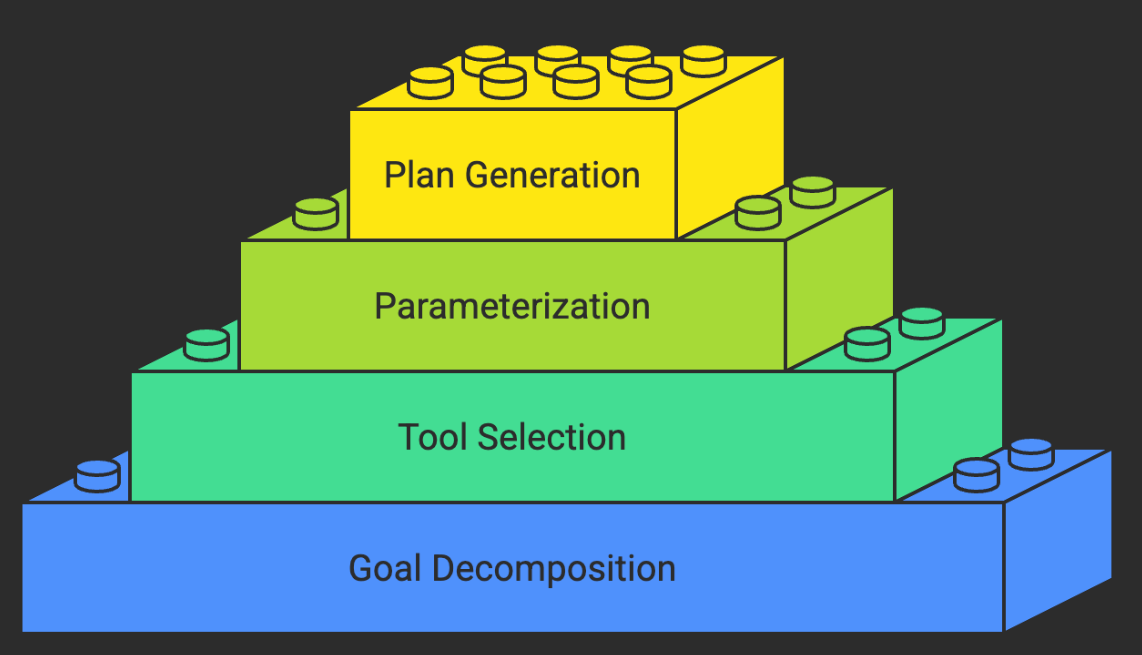

The core output of this phase is a step-by-step blueprint, which, in the Manus AI example, is generated as a human-readable TODO.md file:

# Candidate Analysis for RL Algorithm Engineer Position

## Tasks

- [x] Extract resumes from ZIP file

- [x] Create Excel template with appropriate columns

- [x] Extract text from all resume PDFs

- [x] Extract detailed candidate information from each resume

- [x] Create analysis templates for each resume

- [x] Begin detailed analysis with resume 1

- [x] Complete analysis for all 20 resumes

- [x] Summarize project experiences for each candidate

- [x] Evaluate RL expertise for each candidate

- [x] Add all candidate information to Excel summary

- [x] Rank candidates based on RL expertise

- [x] Finalize Excel file with complete information

- [x] Deliver Excel summary to user

The planning process involves several key activities:

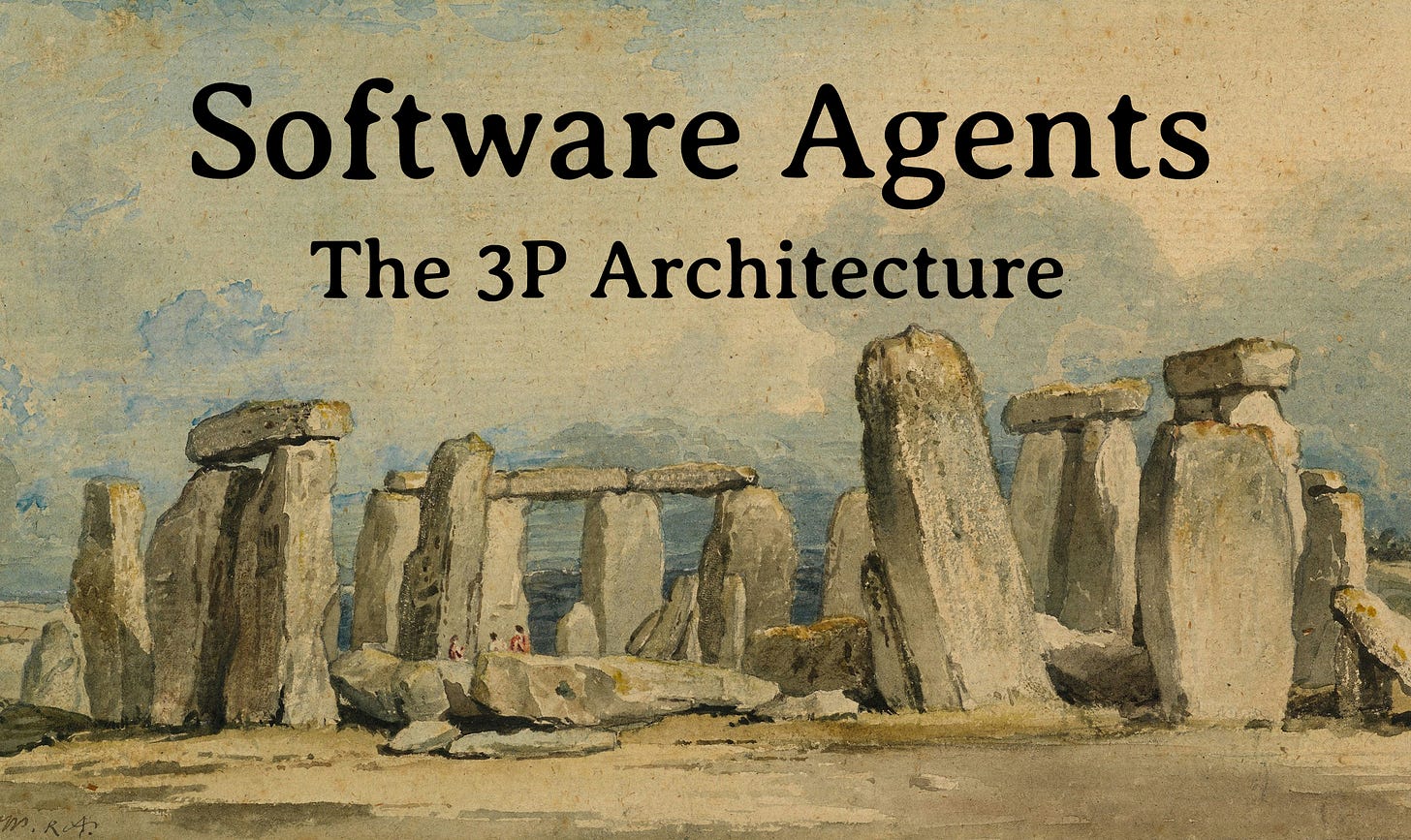

Goal Decomposition: The agent breaks down the high-level user goal into a logical sequence of smaller, manageable sub-tasks. This often happens recursively, refining a broad task like “rank candidates” into granular steps like “list files in directory → read each file → extract skills → score resumes.”

Tool Selection: For each sub-task, the agent intelligently selects the most appropriate tool from its available capabilities (often managed in a central "tool registry"). This is a critical decision-making step, matching a required function (e.g., "search the web," "read a local file," "call an API") to a specific tool.

Parameterization: The agent dynamically maps relevant information from the extracted details and context onto the parameters of each selected tool call (e.g., API request parameters). This helps ensure each call executes with the correct inputs.

Plan Generation & Orchestration: Finally, the agent assembles these prepared tool calls into a plan that defines the logical flow, the dependencies between tasks (e.g., Step 2 can’t run until Step 1 is complete), and opportunities for parallel execution.
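The four activities above can be sketched in Python (3.9+, for `graphlib`) as follows. The tool names in `TOOL_REGISTRY`, the step list, and the file paths are hypothetical, loosely mirroring the TODO.md above; goal decomposition is hard-coded here where a real agent would ask its reasoning engine. The standard-library `graphlib.TopologicalSorter` orders steps by dependency and groups those that could run in parallel.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter

# Hypothetical tool registry: tool name -> what the tool does.
TOOL_REGISTRY = {
    "shell.unzip": "Extract an archive",
    "pdf.to_text": "Extract text from PDFs",
    "llm.extract": "Pull structured fields from text",
    "excel.write": "Write rows to a spreadsheet",
}


@dataclass
class Step:
    name: str
    tool: str                          # Tool selection: a key in TOOL_REGISTRY
    params: dict[str, object]          # Parameterization: filled from the goal
    depends_on: list[str] = field(default_factory=list)


def build_plan(goal: dict) -> list[Step]:
    """Goal decomposition, hard-coded here; a real agent would ask its engine."""
    archive = goal["context"]["files"][0]
    top_k = goal["entities"].get("top_k", 3)
    steps = [
        Step("unzip", "shell.unzip", {"archive": archive, "dest": "resumes/"}),
        Step("to_text", "pdf.to_text", {"src_dir": "resumes/"}, ["unzip"]),
        Step("summarize", "llm.extract", {"fields": ["projects"]}, ["to_text"]),
        Step("score_rl", "llm.extract", {"fields": ["rl_expertise"]}, ["to_text"]),
        Step("report", "excel.write", {"path": "summary.xlsx", "top_k": top_k},
             ["summarize", "score_rl"]),
    ]
    unknown = [s.tool for s in steps if s.tool not in TOOL_REGISTRY]
    if unknown:
        raise ValueError(f"unregistered tools: {unknown}")
    return steps


def execution_order(steps: list[Step]) -> list[tuple[str, ...]]:
    """Orchestration: batches of steps whose dependencies are all satisfied.

    Steps in the same batch do not depend on each other, so they could run in parallel.
    """
    sorter = TopologicalSorter({s.name: s.depends_on for s in steps})
    sorter.prepare()  # raises graphlib.CycleError if dependencies form a cycle
    batches = []
    while sorter.is_active():
        ready = sorter.get_ready()
        batches.append(tuple(sorted(ready)))
        sorter.done(*ready)
    return batches


goal = {"intent": "rank_candidates", "entities": {"top_k": 3},
        "context": {"files": ["resumes.zip"]}}
plan = build_plan(goal)
print(execution_order(plan))
```

Here `summarize` and `score_rl` both depend only on `to_text`, so they land in the same batch, while `report` waits for both.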

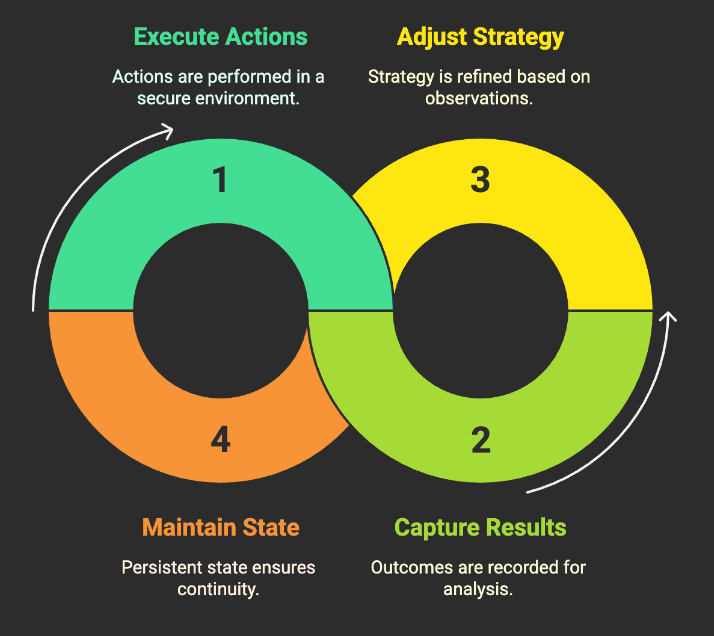

A crucial feature is that this plan is not static. Observations and results from executed tasks are fed back into the planner, which adjusts the plan dynamically, handles unexpected errors, and refines the strategy in real time. This iterative loop is what makes the agent more autonomous.
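A minimal sketch of that feedback loop, assuming a planner that can be called with the remaining steps and the latest observation. `run_with_replanning`, `simple_replanner`, and the tool names are hypothetical; the replanning rule (swap a failed PDF text read for an OCR step) is only a toy example of how an observation can change the plan.

```python
from typing import Callable

Observation = dict[str, object]


def run_with_replanning(
    plan: list[str],
    tools: dict[str, Callable[[], str]],
    replan: Callable[[list[str], Observation], list[str]],
    max_steps: int = 20,
) -> list[Observation]:
    """Run steps in order; after a failure, let the planner rewrite the queue."""
    queue = list(plan)
    history: list[Observation] = []
    while queue and len(history) < max_steps:  # bound guards against endless replanning
        step = queue.pop(0)
        try:
            obs = {"step": step, "ok": True, "output": tools[step]()}
        except Exception as exc:  # the error itself becomes an observation
            obs = {"step": step, "ok": False, "output": str(exc)}
            queue = replan([step] + queue, obs)
        history.append(obs)
    return history


def read_pdf() -> str:
    raise RuntimeError("no text layer: scanned PDF")


tools = {
    "unzip": lambda: "20 files extracted",
    "read_pdf": read_pdf,
    "ocr_pdf": lambda: "text recovered via OCR",
    "score": lambda: "candidates ranked",
}


def simple_replanner(remaining: list[str], obs: Observation) -> list[str]:
    # Hypothetical rule: replace a failed PDF read with an OCR step.
    if obs["step"] == "read_pdf":
        return ["ocr_pdf"] + remaining[1:]
    return remaining[1:]  # otherwise drop the failed step and continue


history = run_with_replanning(["unzip", "read_pdf", "score"], tools, simple_replanner)
print([(h["step"], h["ok"]) for h in history])
```

In a real agent, `simple_replanner` would be a call back into the reasoning engine with the failure observation as extra context.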

3. Perform: Taking Action

This is the final phase, in which the agent translates its plan into action by executing the blueprint within a secure, sandboxed environment. This is where the agent interacts with its digital environment, whether that's the filesystem, a web browser, or an external API.

The process involves:

The Execution Environment (The Sandbox): All actions are executed within a highly secure and isolated virtual machine. This sandboxed environment acts as a protective boundary, preventing any unintended side effects on the host system. To further enhance security and consistency, each tool is often run in its own lightweight, ephemeral container. This ensures strict process isolation and a reproducible environment for every task.

Tool Invocation (The Action): The agent takes the parameterized tool calls from its plan and executes them as low-level commands. This could involve running a shell script, navigating a website with a browser automation tool, reading or writing a file, or calling a specific API endpoint.

Result & Observation: The outcomes of the launched tasks (e.g., shell output, browser screenshots, API responses) are captured. These “observations” are then fed back into the agent loop, serving as critical input to adjust its strategy.

Persistent State Management: To support complex, multi-step workflows, the environment maintains persistent state for key components like the filesystem and browser sessions. This ensures continuity, allowing the agent to perform a series of related actions, such as logging into a website in one step and then navigating to another page in the next.
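The sketch below illustrates tool invocation, observation capture, and persistent state using Python's standard `subprocess` and `tempfile` modules: two separate processes share one working directory, so a file written in the first step is visible in the second. `Workspace` and `Observation` are hypothetical names, and this is not a security sandbox; the isolation described above would come from a VM or container wrapped around this process.

```python
import subprocess
import sys
import tempfile
from dataclasses import dataclass
from pathlib import Path


@dataclass
class Observation:
    command: list[str]
    returncode: int
    stdout: str
    stderr: str


class Workspace:
    """A working directory that persists across successive tool calls.

    This shows state persistence and output capture only. It is NOT a
    security sandbox: real isolation needs a VM or container around it.
    """

    def __init__(self) -> None:
        self._tmp = tempfile.TemporaryDirectory()
        self.root = Path(self._tmp.name)

    def run(self, command: list[str], timeout: float = 30.0) -> Observation:
        try:
            proc = subprocess.run(command, cwd=self.root, capture_output=True,
                                  text=True, timeout=timeout)
        except subprocess.TimeoutExpired:
            # A hung tool is reported back as an observation, not a crash.
            return Observation(command, -1, "", f"timed out after {timeout}s")
        return Observation(command, proc.returncode, proc.stdout, proc.stderr)

    def close(self) -> None:
        self._tmp.cleanup()


ws = Workspace()
# Step 1 writes a file; step 2, a separate process, reads it back.
first = ws.run([sys.executable, "-c",
                "from pathlib import Path; Path('notes.txt').write_text('candidate A: 9/10')"])
second = ws.run([sys.executable, "-c",
                 "from pathlib import Path; print(Path('notes.txt').read_text())"])
print(first.returncode, second.stdout.strip())
ws.close()
```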

If you found my engineer’s perspective on Software Agents and the 3P Architecture valuable, consider subscribing for more insights.
