Introduction: System 1

A standard LLM is trained to predict the next word (more precisely, the next token) based on patterns in its training data. It is good at pattern recognition, summarization, and generating contextually appropriate text. It operates on intuition and probability, predicting the most likely next word. LLMs are knowledgeable, but not necessarily logical.

This is like our brain's "System 1": thinking that is fast, automatic, and intuitive.
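To make the "predict the most likely next word" idea concrete, here is a deliberately tiny sketch. It is a bigram counter that predicts the next word only from how often words followed each other in a made-up corpus. Real LLMs use neural networks over subword tokens and far more data, so treat this as an illustration of the principle, not of how any actual model is built. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional

# Made-up toy corpus (hypothetical); real models train on vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
words = corpus.split()

# Bigram table: for each word, count which words followed it in the corpus.
next_word_counts = defaultdict(Counter)
for current, following in zip(words, words[1:]):
    next_word_counts[current][following] += 1

def next_word_probabilities(word: str) -> dict[str, float]:
    """Turn follower counts into a probability distribution over next words."""
    counts = next_word_counts.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

def predict_next(word: str) -> Optional[str]:
    """Pick the single most likely next word, or None for an unseen word."""
    probabilities = next_word_probabilities(word)
    return max(probabilities, key=probabilities.get) if probabilities else None

print(predict_next("the"))  # cat
print(next_word_probabilities("the"))
```

Nothing in this counter checks whether a continuation makes logical sense. It only knows what usually comes next.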

While these standard Large Language Models (LLMs) are good at these tasks, a new category of AI models has emerged that can actually think through problems step by step.

These are called reasoning models, also known as Large Reasoning Models (LRMs) or Reasoning Language Models (RLMs).

In this post, I'll break down what they are, why they matter more than regular LLMs for complex, multi-step problems, and the basic technical architectures that power their thinking process. By the end, I hope you can clearly distinguish between the two and use the right terms when discussing them with others.

Reasoning Models: System 2

1. Overview

Reasoning models in AI are systems designed to go beyond pattern matching. They mimic human-like logical steps to solve complex problems, make decisions, and draw inferences. Reasoning models aim to think through tasks by breaking them into intermediate steps.

This is like our brain's "System 2": thinking that is slow, deliberate, and analytical.

“By extending the capabilities of standard large language models (LLMs) with sophisticated reasoning mechanisms, RLMs have emerged as the new cornerstone of cutting-edge AI, bringing us closer to AGI.”

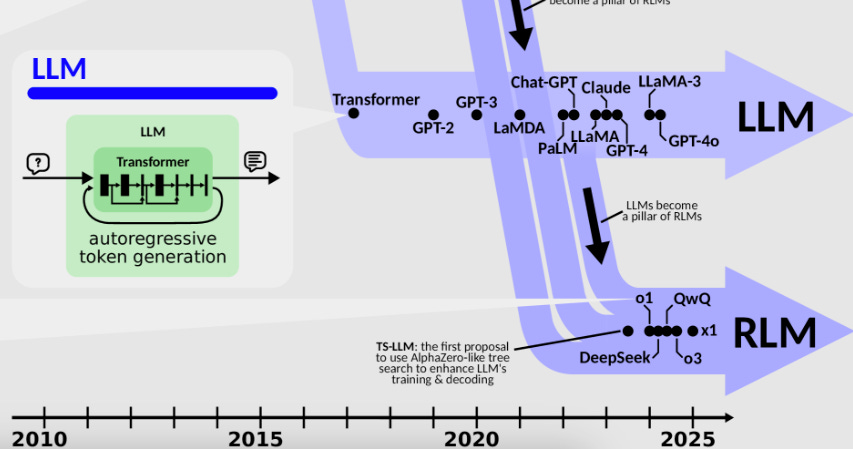

The image above illustrates how RLMs branch out of LLMs by adding thinking capabilities, with LLMs serving as a foundational pillar of RLMs.

2. How the Model Responds: A Famous Example

Rs in Strawberry

Some LLMs may still say there are "2 Rs" in "strawberry" because of tokenization. This is more common in older models, or when the input is broken into subword tokens in unexpected ways.

During tokenization, the word “strawberry” might get split into something like “straw” and “berry”. If the model analyzes tokens as chunks rather than as individual letters, it can overlook characters repeated across or within subwords, and unclear prompts make this more likely. Modern LLMs are much less prone to this issue.
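The sketch below illustrates this failure mode. It uses a hypothetical split of “strawberry” into “straw” and “berry”; real tokenizers may split it differently. Counting characters gives the right answer. A crude chunk-level view that only asks "does this token contain an r?" collapses the double “rr” in “berry” and lands on 2. This is an analogy for the problem, not a claim about how any particular model counts.

```python
# Hypothetical subword split; real tokenizers (BPE, WordPiece, ...) may differ.
word = "strawberry"
tokens = ["straw", "berry"]

# Sanity check: the chunks reassemble into the original word.
assert "".join(tokens) == word

# Character-level view: count every 'r' in the word.
char_level_count = word.count("r")

# Chunk-level view: only notes whether each token contains an 'r',
# so the doubled "rr" inside "berry" is counted once.
chunk_level_count = sum(1 for token in tokens if "r" in token)

print(f"character-level: {char_level_count}")  # 3
print(f"chunk-level:     {chunk_level_count}")  # 2
```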

The sequence diagram below (Figure B) shows how the responses of a standard LLM and an RLM differ.

Mermaid code: https://github.com/kannandreams/when-engineers-meet-ai

This is much better, isn't it? The model shows its reasoning one step at a time. If there's a mistake, we can easily see where it went wrong and help fix it with a follow-up prompt. These models are often integrated into agentic frameworks such as LangGraph, AutoGen, CrewAI, and OpenAgents.

3. Extrapolation > Interpolation

Pattern-based training makes LLMs great at staying within familiar territory: they're good at repeating or remixing what they already know. This is called interpolation, i.e., working within known patterns. But they struggle to go beyond that and come up with truly new ideas.

RLMs are built to explore and reason, which helps them go beyond their training data. This is called extrapolation, i.e., reaching into unknown areas to solve problems.
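Interpolation and extrapolation are terms from mathematics, and a toy numeric analogy may help. It does not model how LLMs or RLMs work internally. The hypothetical data follows y = 2x but is only "seen" for x from 0 to 10. A pure pattern matcher that returns the nearest seen example is accurate inside that range and badly wrong outside it. A rule that captures the underlying relationship keeps working far from the data.

```python
# Hypothetical "training data": y = 2x, observed only for x in 0..10.
training_data = {x: 2 * x for x in range(11)}

def nearest_neighbor(x: float) -> float:
    """Pattern matching: return the y of the closest x seen in training."""
    closest_x = min(training_data, key=lambda seen: abs(seen - x))
    return training_data[closest_x]

def learned_rule(x: float) -> float:
    """A rule that captures the relationship behind the data (y = 2x)."""
    return 2 * x

# 3 and 7 are inside the seen range (interpolation); 50 is far outside it.
for x in (3, 7, 50):
    print(f"x={x}: nearest_neighbor={nearest_neighbor(x)}, learned_rule={learned_rule(x)}")
```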

4. Types of Reasoning Styles

Although all of the screenshot examples above use reasoning models, I want to highlight that reasoning is not necessarily explicit by design, and the “thinking” process is not always visible.

Implicit reasoning models solve problems internally without showing their thought process. They take a prompt or question, process it through their hidden layers, and directly produce an answer, but we don't get to see how they got there (Figure C, left side). This is like someone giving you the solution to a math problem without showing the steps. It's fast, but if the answer is wrong or unclear, it's hard to know why.

Explicit reasoning models solve problems by thinking through them step by step and showing their reasoning process clearly (Figure C, right side). These models often use structured techniques such as chain-of-thought prompting or decision trees, breaking complex problems down into smaller parts before arriving at a final answer.
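Here is a loose analogy in code; it is not how either kind of model works internally. The two functions below solve the same hypothetical word problem: buying 4 pens at $3 each and paying with $20. The implicit version returns only the answer. The explicit version also returns its intermediate steps, so a wrong step could be spotted and corrected. The `Response` type and the function names are made up for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Response:
    answer: int
    steps: list[str] = field(default_factory=list)  # stays empty for implicit reasoning

def implicit_solve(price: int, quantity: int, paid: int) -> Response:
    # Only the final answer is exposed; the intermediate work stays hidden.
    return Response(answer=paid - price * quantity)

def explicit_solve(price: int, quantity: int, paid: int) -> Response:
    # Each intermediate result is recorded so a reader can audit it.
    steps = []
    total = price * quantity
    steps.append(f"Total cost = {price} x {quantity} = {total}")
    change = paid - total
    steps.append(f"Change = {paid} - {total} = {change}")
    return Response(answer=change, steps=steps)

print(implicit_solve(3, 4, 20))
print(explicit_solve(3, 4, 20))
```

Both versions reach the same answer (8). Only the explicit one lets you check how it got there.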

5. The Technical Architecture

How Do They “Think”?

There are various reasoning strategies, such as Chain-of-Thought (CoT), Zero-Shot CoT, Few-Shot CoT, and Tree of Thoughts (ToT).

Advanced Prompting Techniques

This approach uses the same underlying LLM but guides it with a carefully structured prompt to force a step-by-step process.

Chain-of-Thought (CoT): The simplest technique. Instead of just asking for an answer, you instruct the model to “think step-by-step” or “show your work.” This simple prompt can significantly improve performance on multi-step reasoning tasks by forcing the model to slow down and externalize its process.
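CoT is purely a prompting technique, so a sketch only needs to build prompt strings. The helpers `direct_prompt`, `zero_shot_cot_prompt`, and `few_shot_cot_prompt` below are hypothetical. Zero-Shot CoT appends a trigger phrase such as "Let's think step by step." Few-Shot CoT prepends worked examples whose answers spell out the reasoning. Sending the strings to a model is left out, since that depends on which LLM API you use.

```python
def direct_prompt(question: str) -> str:
    # Standard prompting: ask for the answer only.
    return f"Q: {question}\nA:"

def zero_shot_cot_prompt(question: str) -> str:
    # Zero-Shot CoT: append a step-by-step trigger phrase.
    return f"Q: {question}\nA: Let's think step by step."

def few_shot_cot_prompt(question: str, examples: list[tuple[str, str]]) -> str:
    # Few-Shot CoT: prepend worked examples whose answers show the reasoning.
    shots = "\n\n".join(f"Q: {q}\nA: {reasoning}" for q, reasoning in examples)
    return f"{shots}\n\nQ: {question}\nA:"

# Hypothetical worked example used as the "shot".
examples = [
    ("How many r's are in 'carrot'?",
     "Spell it out: c-a-r-r-o-t. The letter r appears at positions 3 and 4. The answer is 2."),
]
question = "How many r's are in 'strawberry'?"

print(zero_shot_cot_prompt(question))
print()
print(few_shot_cot_prompt(question, examples))
```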

Tree-of-Thoughts (ToT): An evolution of CoT. The model explores multiple reasoning paths simultaneously. If one path leads to a contradiction or a dead end, it can backtrack and try another, much like a human exploring different hypotheses.
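Here is a minimal sketch of the search idea behind Tree-of-Thoughts, using a hypothetical toy puzzle: reach 11 from 1 using only "+3" and "*2". In a real ToT setup, an LLM proposes the candidate thoughts and judges which states are promising. Here `propose` and `is_dead_end` are hard-coded stand-ins, so the branching, pruning, and backtracking are easy to follow. Depth-first search is one choice; breadth-first search is another common one.

```python
from typing import Optional

# Hypothetical toy puzzle: reach `target` from `start` using "+3" or "*2".
OPERATIONS = {"+3": lambda n: n + 3, "*2": lambda n: n * 2}

def propose(state: int) -> list[tuple[str, int]]:
    """Stand-in for the LLM proposing thoughts: (operation, resulting state)."""
    return [(name, op(state)) for name, op in OPERATIONS.items()]

def is_dead_end(state: int, target: int) -> bool:
    """Stand-in for the LLM evaluating a state. For positive numbers both
    operations only increase the value, so overshooting can't be undone."""
    return state > target

def tree_of_thoughts(start: int, target: int, max_depth: int) -> Optional[list[str]]:
    """Depth-first search over thoughts, backtracking out of dead ends."""
    def search(state: int, path: list[str]) -> Optional[list[str]]:
        if state == target:
            return path
        if len(path) == max_depth:
            return None
        for name, next_state in propose(state):
            if is_dead_end(next_state, target):
                continue  # prune this branch without exploring it
            result = search(next_state, path + [name])
            if result is not None:
                return result
        return None  # every branch failed: backtrack to the caller

    return search(start, [])

print(tree_of_thoughts(start=1, target=11, max_depth=5))  # ['+3', '*2', '+3']
```

The search first tries 1 → 4 → 7 → 10. Every move from 10 overshoots, so it backtracks and then succeeds with 1 → 4 → 8 → 11.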

Self-Correction / Reflection: The model generates an initial answer and then, in a second step, is prompted to critique its own work. It acts as its own reviewer, identifying flaws in its logic and then attempting to generate a new, improved answer based on that critique.
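A minimal sketch of the generate, critique, revise loop, reusing the strawberry example. The three functions are deterministic stand-ins for what would be separate LLM calls, or separate prompts to the same model. Their names, the hypothetical token split, and the `max_rounds` cap are assumptions for illustration. The loop stops as soon as the critique finds nothing wrong or the round limit is reached.

```python
from typing import Optional

def generate(tokens: list[str], letter: str) -> int:
    """Stand-in for the first draft: a chunk-level guess that can be wrong."""
    return sum(1 for token in tokens if letter in token)

def critique(word: str, letter: str, answer: int) -> Optional[str]:
    """Stand-in for the review step: re-check the draft letter by letter.
    Returns feedback describing the flaw, or None if the answer holds up."""
    recount = sum(1 for char in word if char == letter)
    if recount != answer:
        return f"Recounting letter by letter gives {recount}, not {answer}."
    return None

def revise(word: str, letter: str) -> int:
    """Stand-in for the revision step: answer again, this time spelling the
    word out character by character as the critique suggested."""
    return sum(1 for char in word if char == letter)

def self_correct(word: str, tokens: list[str], letter: str,
                 max_rounds: int = 3) -> tuple[int, list[str]]:
    answer = generate(tokens, letter)
    history = [f"Draft: {answer}"]
    for _ in range(max_rounds):
        feedback = critique(word, letter, answer)
        if feedback is None:
            history.append("Critique: no issues found")
            break
        history.append(f"Critique: {feedback}")
        answer = revise(word, letter)
        history.append(f"Revised: {answer}")
    return answer, history

# Hypothetical subword split of "strawberry".
answer, history = self_correct("strawberry", ["straw", "berry"], "r")
print("\n".join(history))
```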

Modular & Tool-Augmented

This is one of the most powerful and practical approaches today. The LLM acts as a central “controller” that can call external, specialized tools to perform tasks it handles poorly. For example:

User Prompt: “What's the weather in Paris and what should I wear today?”

This hybrid approach combines the LLM’s language understanding with the deterministic output of specialized tools that overcome the LLM's weaknesses.
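The controller pattern can be sketched without a real LLM or weather service. In the hypothetical code below, `get_weather` returns made-up values instead of calling a weather API, and `suggest_outfit` applies fixed clothing rules. A regular expression stands in for the LLM's job of understanding the request and deciding which tools to call. In a production system that decision would typically come from the model itself, for example via function calling. The division of labor is the same: the model handles language, and the tools handle facts and rules.

```python
import re

# Hypothetical tool: a real version would call a weather API.
# The values are made up so the example runs offline.
def get_weather(city: str) -> dict:
    fake_data = {"Paris": {"temp_c": 14, "condition": "rain"}}
    return fake_data.get(city, {"temp_c": 20, "condition": "clear"})

# Hypothetical tool: fixed, deterministic clothing rules.
def suggest_outfit(temp_c: int, condition: str) -> str:
    if temp_c < 10:
        outfit = "a warm jacket"
    elif temp_c < 18:
        outfit = "a light jacket"
    else:
        outfit = "a T-shirt"
    if condition == "rain":
        outfit += " and an umbrella"
    return outfit

TOOLS = {"get_weather": get_weather, "suggest_outfit": suggest_outfit}

def controller(prompt: str) -> str:
    """Stand-in for the LLM controller: parse the request, call tools, phrase the answer."""
    # 1. Understand the request (a regex stands in for the LLM here).
    match = re.search(r"weather in ([A-Z][a-z]+)", prompt)
    if match is None:
        return "Sorry, I couldn't find a city in your question."
    city = match.group(1)
    # 2. Delegate facts and rules to the tools instead of guessing.
    weather = TOOLS["get_weather"](city)
    outfit = TOOLS["suggest_outfit"](weather["temp_c"], weather["condition"])
    # 3. Turn the tool outputs back into natural language.
    return (f"It's {weather['temp_c']}°C with {weather['condition']} in {city}. "
            f"I'd suggest {outfit}.")

print(controller("What's the weather in Paris and what should I wear today?"))
```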

Planning + Execution Models

These are agent-like architectures that separate planning from execution. They generate structured plans, decompose complex tasks, use external tools, manage memory, and carry out actions step by step.
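A rough sketch of the planner/executor split, under heavy simplifying assumptions. The planner returns a fixed plan instead of asking an LLM to decompose the task. The tools are hypothetical lambdas over made-up prices, and "memory" is just a dictionary that later steps read from. What the sketch does show is the shape of the architecture: a structured plan is produced first, and then an executor carries it out step by step.

```python
from dataclasses import dataclass, field

@dataclass
class Step:
    description: str
    tool: str                                 # which tool the executor should call
    args: dict = field(default_factory=dict)  # tool arguments chosen by the planner

def plan(task: str) -> list[Step]:
    """Stand-in planner: an LLM would decompose `task`; this plan is hard-coded."""
    return [
        Step("Look up the prices in the order", "load_prices"),
        Step("Add the prices up", "total", {"source": "load_prices"}),
        Step("Apply the 10% discount", "discount", {"source": "total", "rate": 0.10}),
    ]

# Hypothetical tools; each receives the shared memory plus its own arguments.
TOOLS = {
    "load_prices": lambda memory, args: [12.0, 8.0, 30.0],  # made-up order data
    "total": lambda memory, args: sum(memory[args["source"]]),
    "discount": lambda memory, args: round(memory[args["source"]] * (1 - args["rate"]), 2),
}

def execute(steps: list[Step]) -> dict:
    """Executor: run the plan in order, saving each result to memory by tool name."""
    memory: dict = {}
    for number, step in enumerate(steps, start=1):
        memory[step.tool] = TOOLS[step.tool](memory, step.args)
        print(f"Step {number}: {step.description} -> {memory[step.tool]}")
    return memory

memory = execute(plan("How much do I pay for my order after a 10% discount?"))
```

Keeping the plan as data, separate from the code that runs it, is what lets an agent inspect, reorder, or re-plan steps before executing them.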

6. Challenges / Limitations

Appendix

I've come across some exceptional articles that explore reasoning in language models with remarkable clarity and depth, in both explanation and visuals. Honestly, I don't think I could do them justice by trying to recreate them myself.

and have done a brilliant job unpacking how reasoning works. I highly recommend reading their posts and following them to learn from true experts.

Small Reasoning Models to try locally