Let’s assume you’ve built a high-accuracy NLP model by combining several transformers and averaging their predictions to get better results (a.k.a. the ensemble approach). It performs best and delivers impressive results.

But there’s a catch: inference is slow, memory usage is high, and it’s nearly impossible to deploy on mobile or in real-time applications.

For instance, serving a single 175-billion-parameter LLM requires at least 350GB of GPU memory for the weights alone (at 16-bit precision, 2 bytes per parameter), which calls for powerful infrastructure.
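The 350GB figure is consistent with storing each weight in 16-bit precision and counting only the weights (no activations or caches). A quick back-of-the-envelope sketch; the function name is purely illustrative:

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

params = 175e9  # 175 billion parameters

fp32_gb = weight_memory_gb(params, 4)  # 32-bit floats
fp16_gb = weight_memory_gb(params, 2)  # 16-bit floats
int8_gb = weight_memory_gb(params, 1)  # 8-bit quantized weights

print(f"FP32: {fp32_gb:.0f} GB, FP16: {fp16_gb:.0f} GB, INT8: {int8_gb:.0f} GB")
```

Even aggressive quantization leaves a model of this size far too large for a phone, which is why a smaller architecture is needed.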

Previously, we explored techniques like quantization, where we compress the model by reducing the precision of its weights. While that helps optimize inference and reduce memory footprint, it doesn’t change the model’s architecture.

What we need is a different kind of solution, one that gives us a model that is smaller, faster, and easier to deploy.

That’s exactly where Knowledge Distillation comes in.

Knowledge Distillation

Knowledge Distillation is a machine learning technique where a small, compact model (the “student”) is trained to mimic the behavior of a larger, pre-trained model (the “teacher”). The goal is to transfer the knowledge from the teacher to the student, enabling the student to achieve a similar level of performance while being significantly smaller, faster, and more efficient.

Knowledge Distillation can be considered a form of Transfer Learning, but with some specific characteristics.

Just like Encoder-Decoder is a common architecture in sequence-to-sequence models, Knowledge Distillation follows a Teacher-Student architecture.

How is it Different from Quantization?

Before jumping in, I want to quickly highlight how Knowledge Distillation is different from quantization, since we explored quantization in previous posts.

Knowledge Distillation is often mentioned alongside other model compression techniques, most notably Quantization. While both aim to make models more efficient, they do so in fundamentally different ways.

The table below outlines the key distinctions between the two concepts.

History: Where Did Knowledge Distillation Begin?

This technique was proposed by Geoffrey Hinton, Oriol Vinyals, and Jeff Dean in their 2015 paper: Distilling the Knowledge in a Neural Network.

This paper formally introduced the term “distillation” in deep learning and laid the foundation for many modern student-teacher training approaches in model compression, especially for neural networks and LLMs.

Spot it! Can you find them in the picture? This image features many of the top researchers who have made significant contributions to AI.

ELI5: The Professor and the High School Student

Imagine a top university professor, like someone from the image above, who has spent decades studying a complex subject. This professor is our teacher model. They have an immense and detailed understanding of the topic.

Now, imagine a bright high school student. This student is our student model. They have a limited knowledge capacity (fewer parameters) and haven’t read all the books or research papers that the professor has.

There are two ways the professor can teach the student for a final exam:

Traditional Training (Hard Labels):

The professor gives the student a list of questions and the final, correct answers: “The answer to question 1 is A. The answer to question 2 is C,” and so on. The student memorizes the answers. They might do well on the exam if the questions are identical, but they won't truly understand the subject and will fail if the questions are slightly different.

Knowledge Distillation (Soft Labels):

The professor sits with the student and explains their thought process. "The answer to question 1 is A, and here’s why. B is also a plausible answer, but it's wrong because of this small difference. C is completely incorrect for these reasons."

In this second method, the student isn’t just learning the correct answer; they are learning the reasoning behind it. They are learning how the professor thinks. This rich, contextual information allows the student to build a much more robust and generalized understanding, performing far better than their limited capacity would otherwise allow.

How does knowledge distillation work?

Basically, a knowledge distillation system is composed of three key components, each of which plays a crucial role in how the student learns:

Knowledge

Distillation algorithm

Teacher-Student architecture.

The overview diagram above shows a complete breakdown of Knowledge Distillation, branching into types of knowledge, distillation methods, core algorithms, and practical applications.

In this diagram:

The dotted arrows represent the flow of feature knowledge from teacher to student. Pink boxes represent input layers, blue boxes show hidden layers where complex features are learned, and green boxes indicate output layers.

Soft targets refer to the probability distributions learned by the teacher model, while feature knowledge represents the intermediate representations learned in hidden layers.

Knowledge distillation operates through three fundamental types of knowledge transfer. Each type of knowledge serves a specific purpose in the distillation process, and they can be combined to achieve better results. The choice of knowledge type depends on the specific application and requirements of the student model.

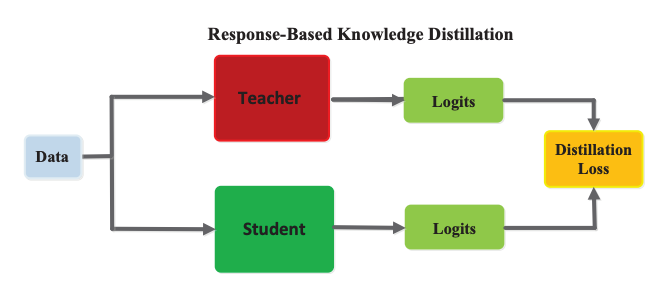

1. Response-Based Knowledge

The student copies the teacher’s final answers on the test.

Focuses on matching the teacher model’s final predictions

Uses soft targets (probability distributions) rather than hard labels

Helps the student model learn the teacher's decision-making patterns

Input Data is passed to both the Teacher and Student models.

Each model outputs logits (the raw outputs before softmax).

✏️ Logits: The raw output scores produced by a neural network’s final layer, before softmax (or any other activation function) is applied. Logits contain richer information than just the final predicted class. Softmax turns logits into probabilities, but for distillation, we often compare logits.

For example, instead of just saying “this is a cat,” the model says:

Logits = cat: 5.1, dog: 4.8, fox: 1.2

Softmax Probabilities = cat: 56.8%, dog: 42.1%, fox: 1.1%

The Student's logits are compared to the Teacher's logits.

The difference between them is used to calculate the Distillation Loss. This loss guides the Student model to mimic the output behavior of the Teacher.
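To make the comparison concrete, here is a minimal sketch in plain Python (no framework, so it runs anywhere). It assumes the loss is the KL divergence between temperature-softened teacher and student distributions, scaled by T², as in the Hinton et al. paper. The temperature value and the student logits are made up for illustration.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student))
    return kl * temperature ** 2

# Teacher logits from the cat/dog/fox example
teacher_logits = [5.1, 4.8, 1.2]
# Hypothetical student logits for the same input
student_logits = [3.0, 1.0, 0.5]

teacher_probs = softmax(teacher_logits)
print([round(p, 3) for p in teacher_probs])  # [0.568, 0.421, 0.011]

kd_loss = distillation_loss(student_logits, teacher_logits)
print(f"distillation loss: {kd_loss:.4f}")
```

The loss is zero only when the student's softened distribution matches the teacher's exactly, so minimizing it pushes the student to reproduce how the teacher spreads probability across the wrong classes as well as the right one.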

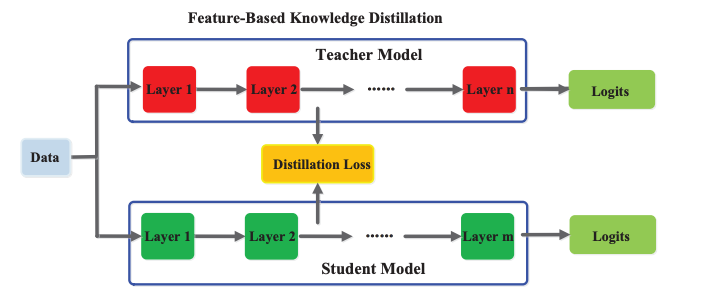

2. Feature-Based Knowledge

The student watches how the teacher solves the problem step by step.

In this knowledge type, the student not only mimics the output logits but also learns from the intermediate feature representations of the teacher model.

In large models, a lot of useful knowledge is hidden in intermediate layers, not just the final output.

This method is especially useful in vision and language models, where early and mid-layer features encode essential information such as edges (in vision) or syntax (in language).

Input Data is passed to both the Teacher and Student models.

The models consist of multiple layers like Layer 1, Layer 2, … Layer n.

Instead of only comparing the final logits, this method compares the features (activations) from selected intermediate layers and uses them to compute the Distillation Loss.

✏️ Feature Maps / Activations: The output of a specific intermediate layer in the network. They capture the learned representation of the input.
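A minimal sketch of a feature-based loss, under a few assumptions that are not in the text above: the loss is mean squared error, and because the student's hidden size is usually smaller than the teacher's, a linear projection (here a hypothetical fixed matrix; in practice it would be trained along with the student) maps student features into the teacher's space first.

```python
def project(features, weight):
    """Linear projection: map a student feature vector into the teacher's feature size."""
    return [sum(w * x for w, x in zip(row, features)) for row in weight]

def feature_loss(student_features, teacher_features, weight):
    """Mean squared error between projected student features and teacher features."""
    projected = project(student_features, weight)
    squared = [(p - t) ** 2 for p, t in zip(projected, teacher_features)]
    return sum(squared) / len(squared)

# Hypothetical activations from one intermediate layer for a single input
teacher_features = [0.5, -1.0, 2.0, 0.0]  # teacher hidden size 4
student_features = [1.0, 2.0]             # student hidden size 2

# Hypothetical 4 x 2 projection matrix
weight = [
    [0.5, 0.0],
    [0.0, -0.5],
    [1.0, 0.0],
    [0.0, 0.0],
]

feat_loss = feature_loss(student_features, teacher_features, weight)
print(feat_loss)  # 0.25
```

Here three of the four projected features already match the teacher, and the remaining mismatch (1.0 vs. 2.0) is what the loss would push the student to fix.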

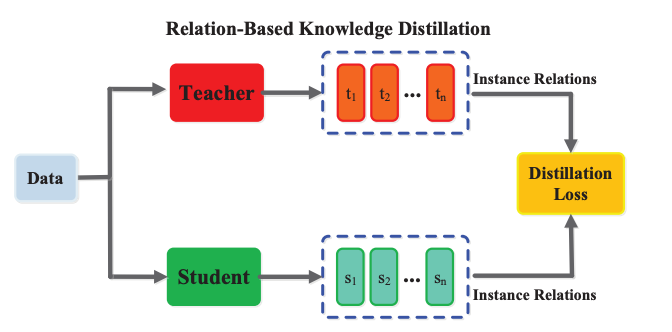

3. Relation-Based Knowledge

The student observes how the teacher compares different topics or problems, such as how similar two math problems are or which concepts are connected.

It is a more advanced technique where the student learns not just from the teacher's outputs or features, but also from the relationships between instances learned by the teacher.

Input Data is fed to both the Teacher and Student models.

Instead of just focusing on each sample’s output (like logits or features), this method extracts a set of intermediate representations from the Teacher (e.g. t1,t2,...,tn) and from the Student (e.g. s1,s2,...,sn).

It then computes instance-to-instance relationships. These instance relations (distances or similarities) are computed for both the Teacher and the Student.

The Distillation Loss compares these relationships, encouraging the student to preserve the same structural understanding of the input space as the teacher.

✏️ Instance Representation : A vector representing a sample at a certain layer (could be output, embedding, or feature map).

✏️ Instance Relations : Similarity or distance measures between pairs of instances (e.g., cosine similarity, Euclidean distance).
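The steps above can be sketched in plain Python as follows. This is one possible formulation, not the only one: it assumes Euclidean distance as the relation, normalizes each distance matrix by its mean so that teacher and student embeddings of different scale stay comparable, and uses a mean squared difference as the loss. All vectors are made-up toy values.

```python
import math

def pairwise_distances(vectors):
    """Euclidean distance between every pair of instance representations."""
    n = len(vectors)
    return [[math.dist(vectors[i], vectors[j]) for j in range(n)] for i in range(n)]

def normalize(matrix):
    """Divide by the mean off-diagonal distance so different embedding scales are comparable."""
    n = len(matrix)
    off_diag = [matrix[i][j] for i in range(n) for j in range(n) if i != j]
    mean = sum(off_diag) / len(off_diag)
    return [[d / mean for d in row] for row in matrix]

def relation_loss(student_vectors, teacher_vectors):
    """Mean squared difference between normalized student and teacher distance matrices."""
    s = normalize(pairwise_distances(student_vectors))
    t = normalize(pairwise_distances(teacher_vectors))
    n = len(s)
    diffs = [(s[i][j] - t[i][j]) ** 2 for i in range(n) for j in range(n)]
    return sum(diffs) / len(diffs)

# Teacher representations t1, t2, t3 (dimension 3)
teacher = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 2.0, 0.0]]
# Student A keeps the same geometry in a smaller space (dimension 2), just rescaled
student_a = [[0.0, 0.0], [2.0, 0.0], [0.0, 4.0]]
# Student B arranges the same three samples differently
student_b = [[0.0, 0.0], [3.0, 0.0], [0.0, 1.0]]

loss_a = relation_loss(student_a, teacher)
loss_b = relation_loss(student_b, teacher)
print(f"student A: {loss_a:.6f}, student B: {loss_b:.6f}")
```

Student A gets a loss of essentially zero even though its vectors have a different size and scale than the teacher's, because only the relationships between samples are compared.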

Training Methods

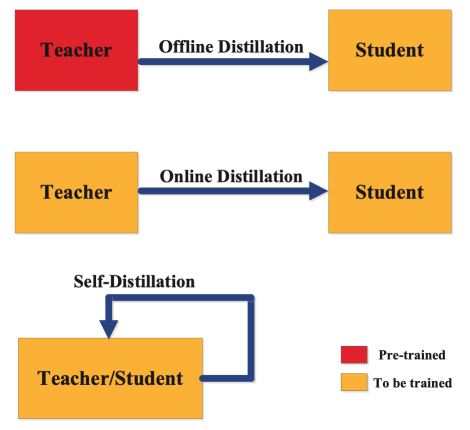

Offline Distillation: The process starts with a fully pre-trained teacher model whose weights are kept frozen during the student’s training. This is the most common and straightforward form of knowledge distillation, widely used when a strong, pre-trained teacher is available.

Online Distillation: Both the teacher and student models are trained simultaneously. Instead of relying on a pre-trained teacher, both models learn and improve together, often sharing knowledge dynamically during training. This approach is particularly useful in scenarios where a pre-trained teacher isn’t available or when models are learning from evolving data streams.

Self-Distillation: A special case where a single model acts as both teacher and student. This technique helps improve generalization even without introducing an external teacher model.
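Offline distillation, the most common of the three, can be sketched as a toy training loop. Everything here is illustrative: the "teacher" is a fixed set of hypothetical weights standing in for a pre-trained model, the student is a tiny linear classifier, the inputs are made up, and the loss is the KL divergence between teacher and student probabilities (temperature 1 for simplicity). The key point is that only the student's weights are ever updated.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def linear(weights, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def kl_divergence(p, q):
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

# Frozen teacher: hypothetical fixed weights, never updated below
TEACHER_WEIGHTS = [[2.0, -1.0], [-1.0, 2.0], [0.5, 0.5]]

def teacher_probs(x):
    return softmax(linear(TEACHER_WEIGHTS, x))

# Toy unlabeled inputs; the teacher's soft targets are the only supervision
inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5]]

# Student starts from zeros, i.e. uniform predictions
student_weights = [[0.0, 0.0] for _ in range(3)]

def average_loss(weights):
    losses = [kl_divergence(teacher_probs(x), softmax(linear(weights, x))) for x in inputs]
    return sum(losses) / len(losses)

initial_loss = average_loss(student_weights)

learning_rate = 0.5
for step in range(200):
    for x in inputs:
        p_t = teacher_probs(x)                      # teacher is only queried
        p_s = softmax(linear(student_weights, x))   # student forward pass
        # Gradient of KL(teacher || student) w.r.t. the student logits is (p_s - p_t)
        for k in range(3):
            for d in range(2):
                student_weights[k][d] -= learning_rate * (p_s[k] - p_t[k]) * x[d]

final_loss = average_loss(student_weights)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

In online distillation the teacher's weights would be updated inside the same loop, and in self-distillation the teacher and student would be the same network (for example, deeper layers supervising shallower ones).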

Libraries/Distilled Models

Hugging Face Transformers provides many pre-distilled models, such as DistilBERT and TinyBERT.

DistilBERT is a prime example of a distilled model. It is 40% smaller than BERT, 60% faster, and retains 97% of its language understanding capabilities.

TorchDistill is a lightweight, modular PyTorch distillation framework.